Diffuse reflection UV computation tool

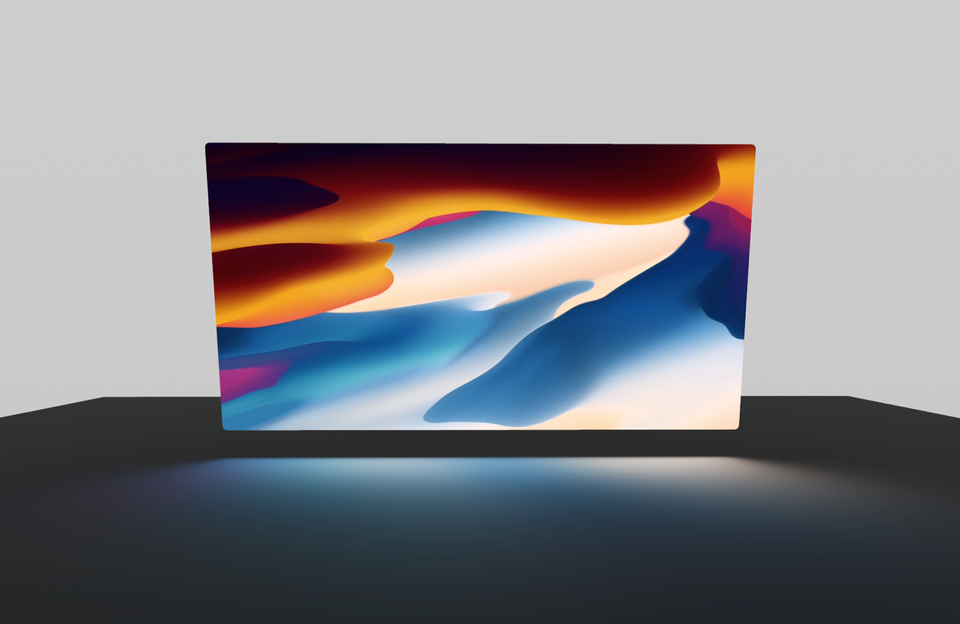

In visionOS, there's something about docking videos in immersive environments (anchoring them like a screen in a movie theater) that makes them feel much more present and impressive. Even though this has been limited to a select few apps, with the system controlling where the screen goes, I've found that practically everyone is amazed by this simple trick when I demo the device. Apple seems to agree: this year, the session "Enhance the immersion of media viewing in custom environments" introduces DockingRegionComponent. This new component lets any app define its own docking region and achieve the same effect, or an even better one.

Content description: A video of the Destination Video player in an immersive space in visionOS.

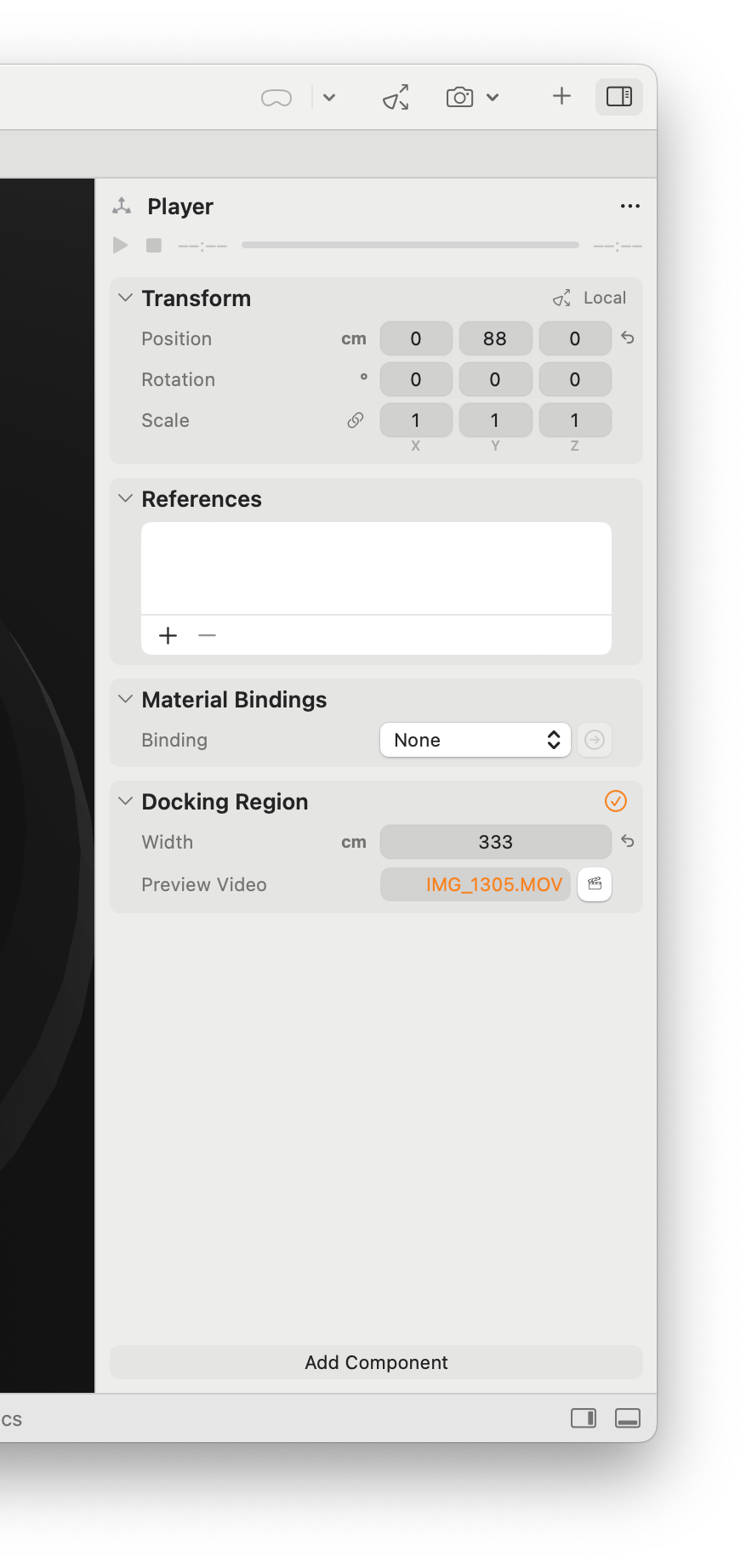

Docking Region Component

The width property defines the maximum size for media playback, which uses a 2.4:1 aspect ratio.
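To make the 2.4:1 ratio concrete, here is a minimal Python sketch of the arithmetic. It assumes, for illustration only, that the width (in meters, RealityKit's unit) bounds a 2.4:1 playback area and that media with a different aspect ratio, such as 16:9, is scaled to the largest size that fits inside it. The helpers `max_playback_height` and `fitted_size` are hypothetical and not part of RealityKit.

```python
from __future__ import annotations

# Aspect ratio of the docking region (width : height), per the session: 2.4:1.
DOCKING_ASPECT_RATIO = 2.4


def max_playback_height(width: float) -> float:
    """Height of a 2.4:1 playback area whose width is `width` (assumed meters)."""
    if width <= 0:
        raise ValueError("width must be positive")
    return width / DOCKING_ASPECT_RATIO


def fitted_size(width: float, media_aspect: float) -> tuple[float, float]:
    """Fit media with aspect `media_aspect` (w / h) inside the 2.4:1 area.

    Assumption for illustration: media keeps its own aspect ratio and is
    scaled to the largest size that fits the region.
    """
    region_height = max_playback_height(width)
    if media_aspect >= DOCKING_ASPECT_RATIO:
        # As wide as or wider than 2.4:1: limited by width (letterboxed).
        return width, width / media_aspect
    # Narrower, e.g. 16:9: limited by height (pillarboxed).
    return region_height * media_aspect, region_height
```

Under these assumptions, a 24 m wide region tops out at 10 m tall, and a 16:9 video would be pillarboxed to roughly 17.8 × 10 m.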

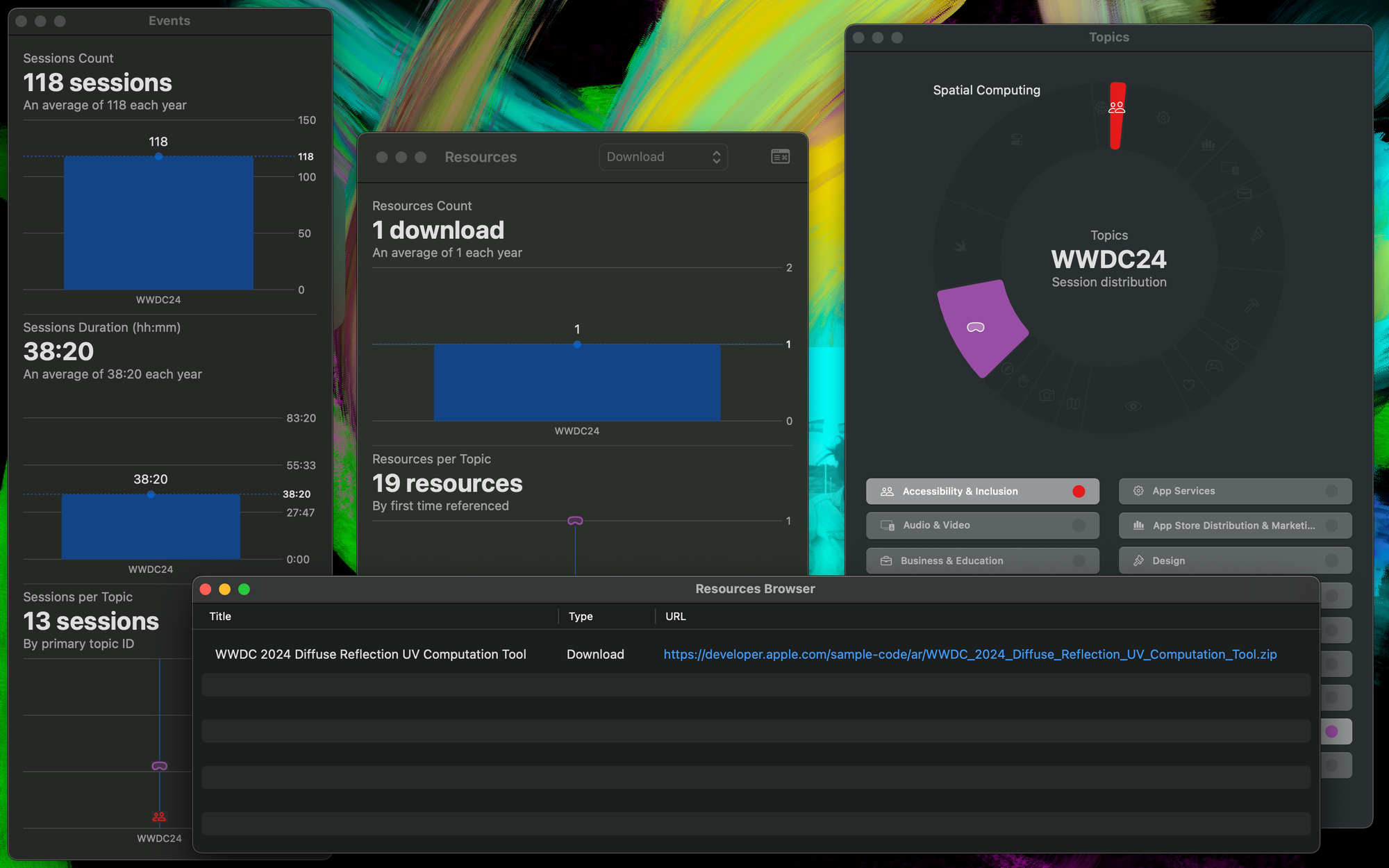

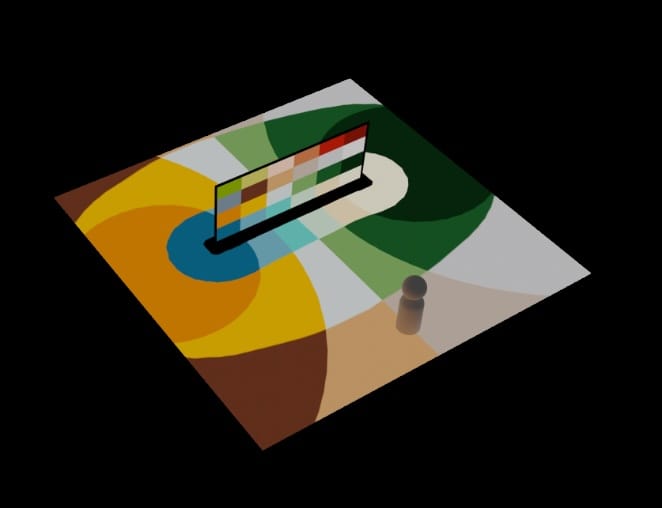

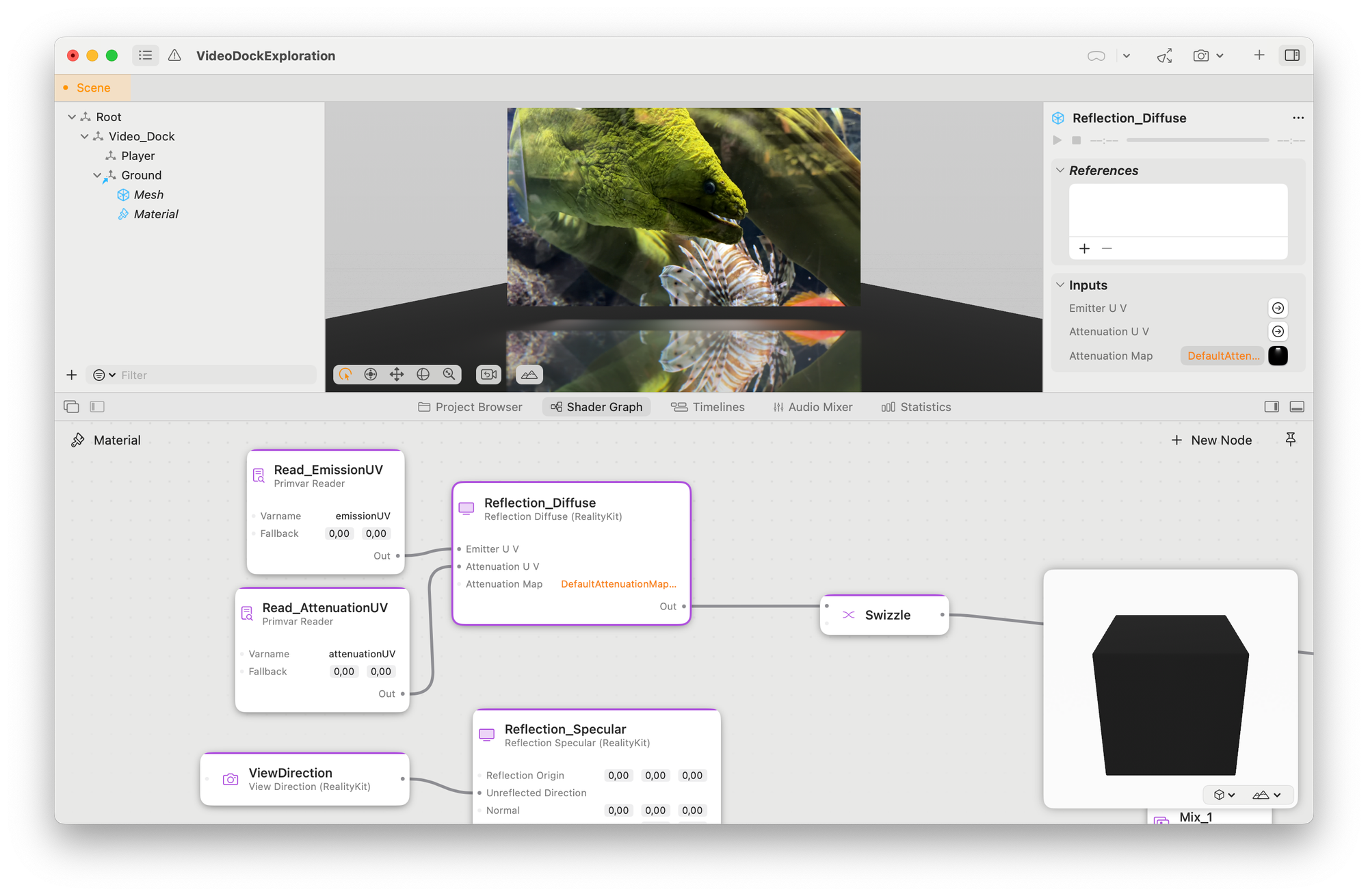

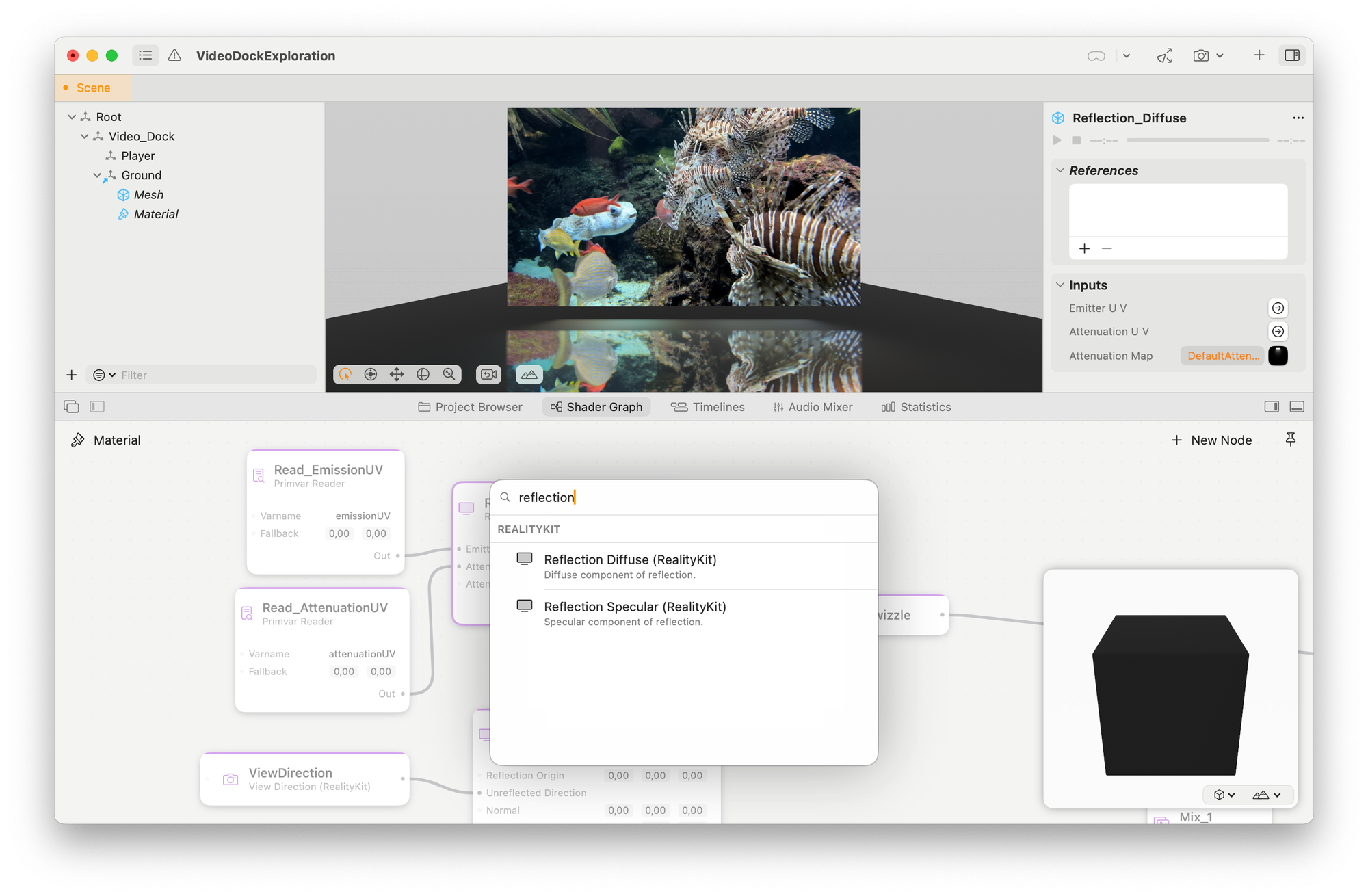

One of the things that caught my interest was the attention to detail in media reflections. Thinking of reflections as a viewing affordance for media is a lovely idea; it reminds me of the early Keynote styles. The session covers everything needed to set them up in Reality Composer Pro, but one slightly unclear part is that the reflection texture mapping requires a well-defined set of UVs on the model, particularly when you add custom geometry to the surroundings that should reflect the screen.

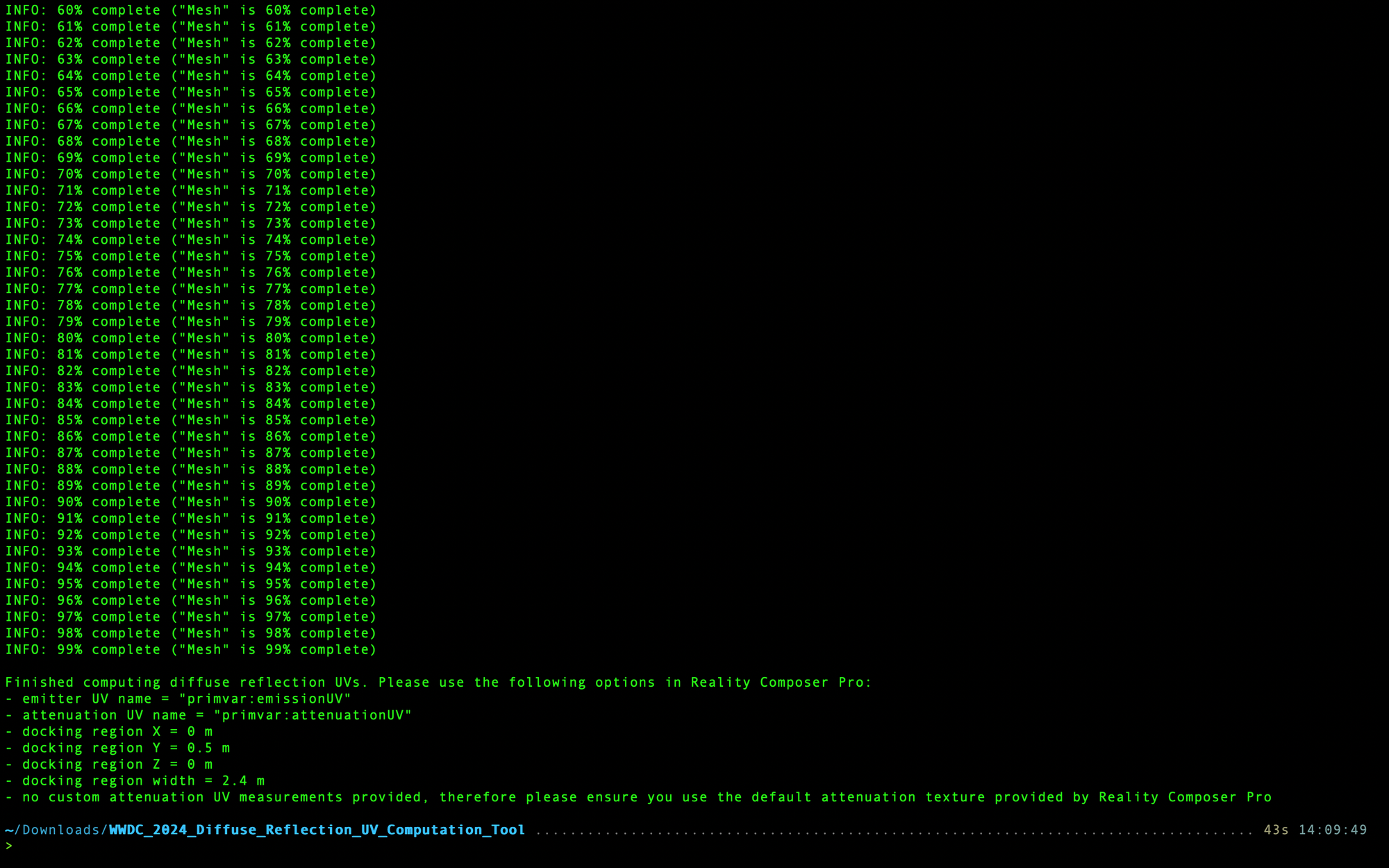

Conveniently, if you look carefully at the session resources, you'll find the only download of this year: a Python script, built on top of the existing USD Python library, with an algorithm that computes emitter and attenuation UVs.

It also comes with a great PDF that explains the process and lists the requirements and possible solutions for something I can see becoming part of RCP itself in the future.

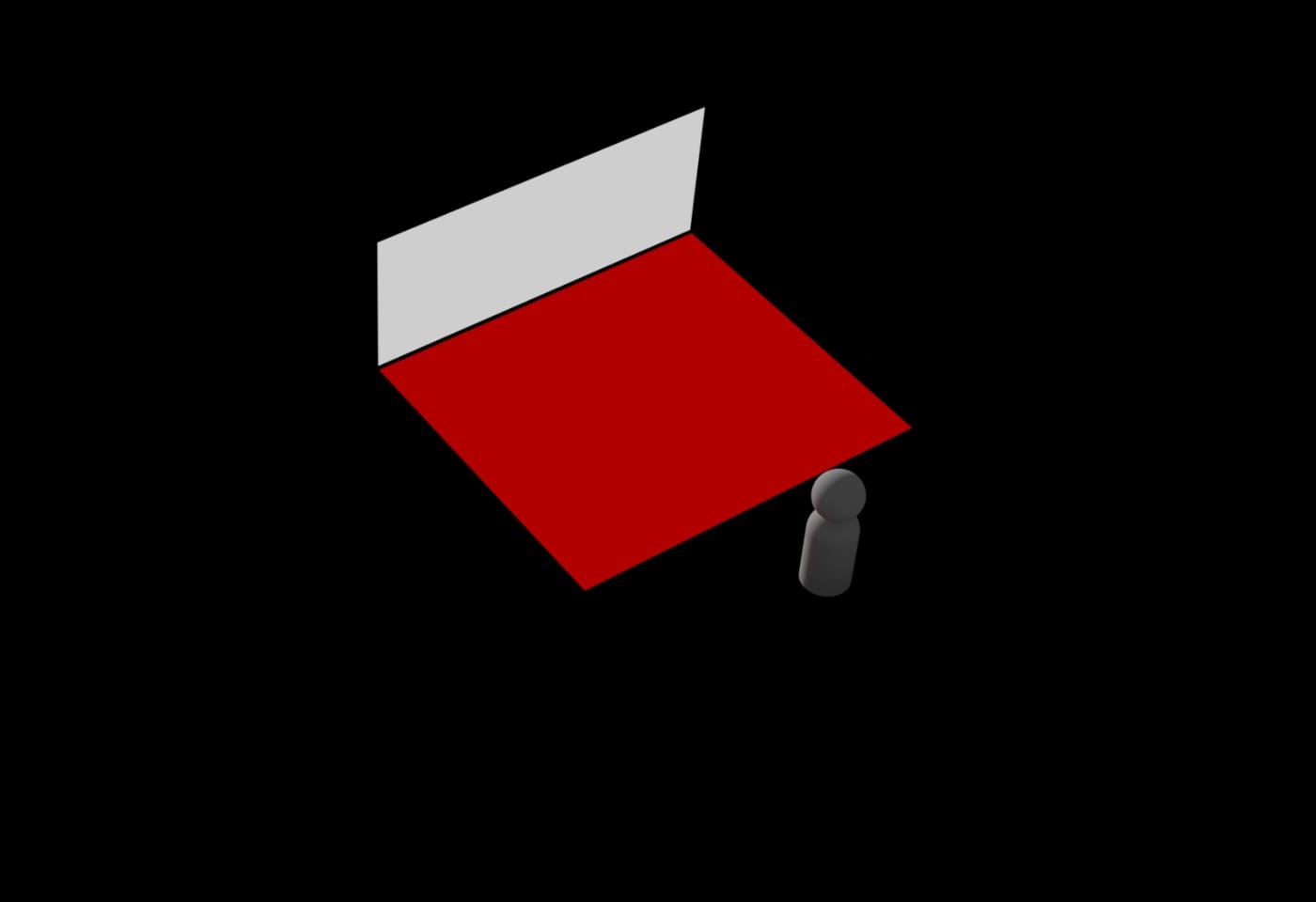

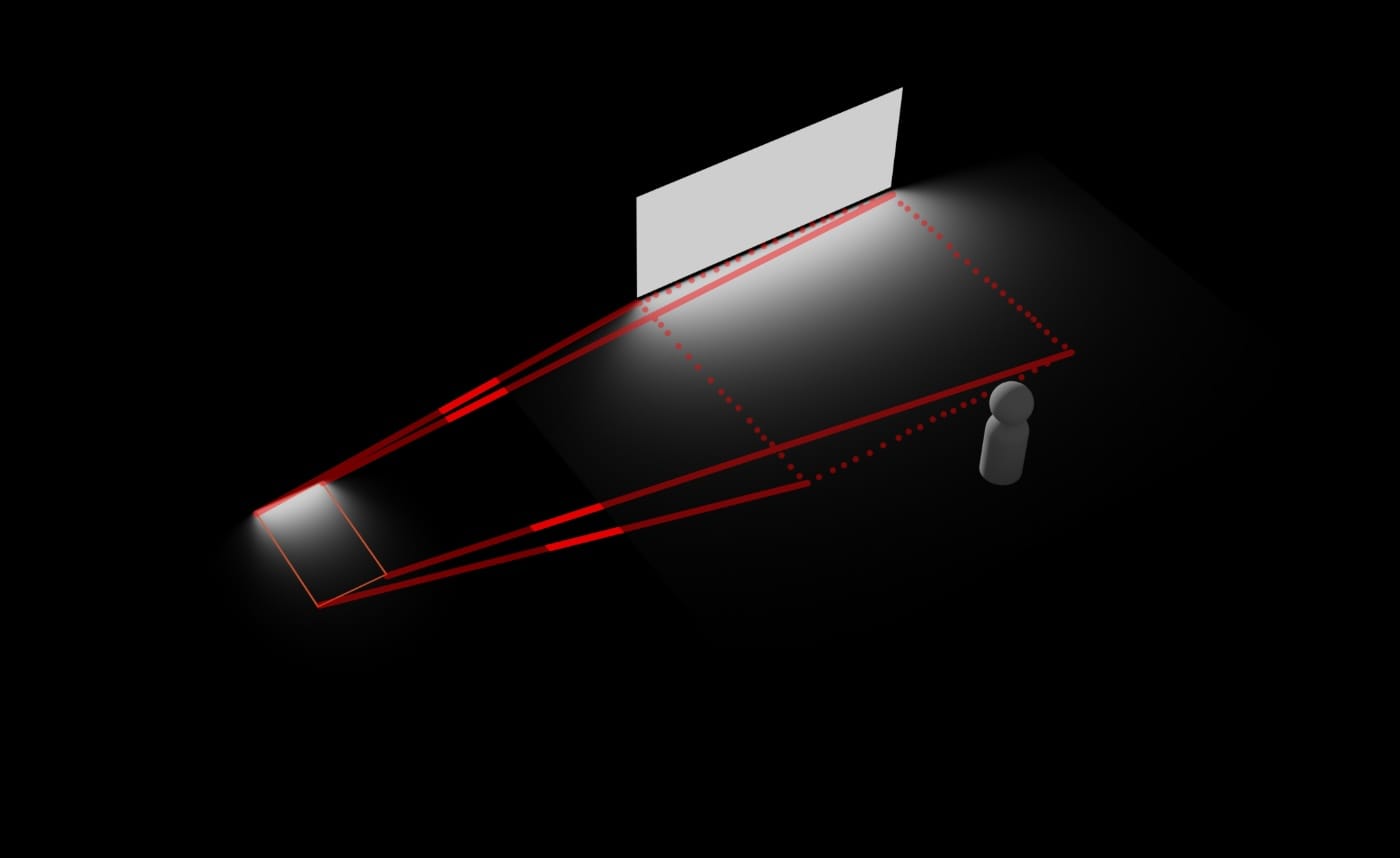

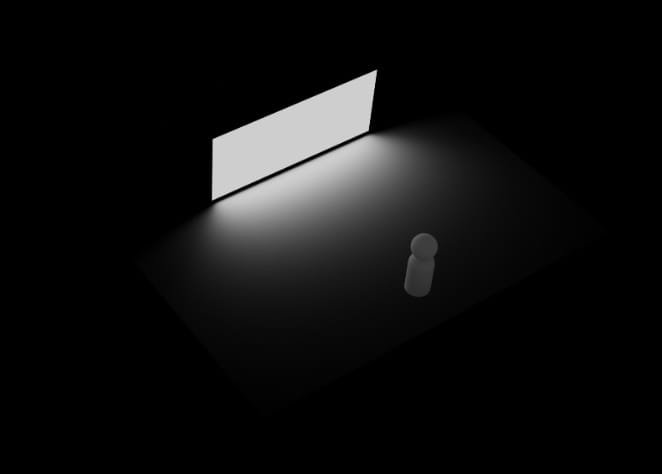

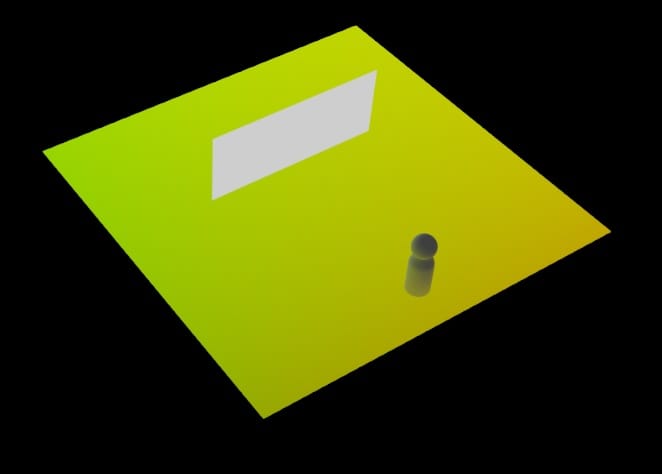

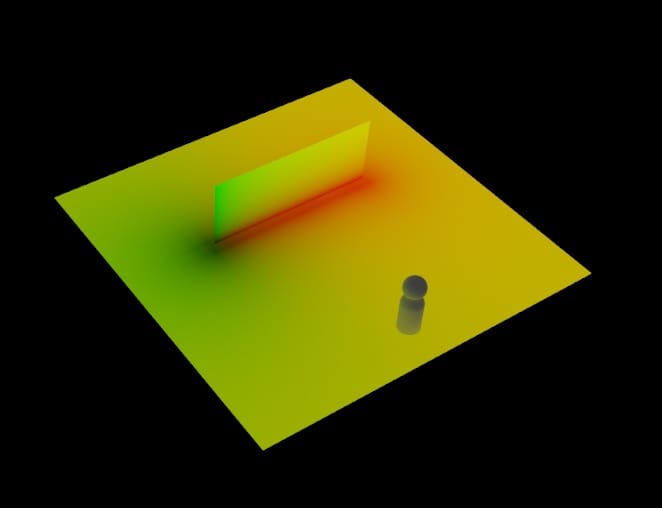

Images from the companion PDF documentation, describing step by step what the algorithm does to the model's UVs so they work with the .exr attenuation texture.
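To build some intuition for what per-vertex "emitter" and "attenuation" UVs could encode, here is a deliberately simplified toy model in Python. It is not the algorithm in Apple's script or PDF. It assumes a flat screen rectangle in the z = 0 plane, gives each vertex an emitter UV from the nearest point on that rectangle, and packs a clamped, normalized distance into a hypothetical attenuation coordinate. `Screen`, `toy_reflection_uvs`, and `max_distance` are illustrative names only.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Screen:
    """Hypothetical flat screen: a width x height rectangle centred at the
    origin in the z = 0 plane (units are whatever the scene uses)."""
    width: float
    height: float


def toy_reflection_uvs(
    point: tuple[float, float, float], screen: Screen, max_distance: float
) -> tuple[tuple[float, float], tuple[float, float]]:
    """Return (emitter_uv, attenuation_uv) for one vertex.

    Toy model only; NOT the algorithm used by Apple's script.
    """
    x, y, z = point
    half_w, half_h = screen.width / 2, screen.height / 2
    # Nearest point on the screen rectangle: clamp x and y to its extents.
    nx = min(max(x, -half_w), half_w)
    ny = min(max(y, -half_h), half_h)
    # Emitter UV: where that nearest point sits on the screen, each in [0, 1].
    emitter_uv = ((nx + half_w) / screen.width, (ny + half_h) / screen.height)
    # Straight-line distance from the vertex to that nearest point.
    distance = math.sqrt((x - nx) ** 2 + (y - ny) ** 2 + z ** 2)
    # Hypothetical attenuation coordinate: 0 at the screen, 1 at max_distance+.
    falloff = min(distance / max_distance, 1.0)
    return emitter_uv, (falloff, 0.0)
```

For a 4 × 2 screen, a vertex at (1, -3, 2), below and in front of it, maps to emitter UV (0.75, 0.0), the bottom edge three quarters of the way across, with a falloff of about 0.28 when `max_distance` is 10. A real diffuse reflection blends light from the whole screen, which is part of why the actual tool and its .exr attenuation texture are more involved than this.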

Here's an overview of how it works (a combination of RCP and the terminal):

```shell
python3 computeDiffuseReflectionUVs.py <input.usd> -o <output.usd> -p / -r true
```
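If you have several environments to process, a small Python wrapper can run the same command once per file. This is a sketch under assumptions: it copies the `-o`, `-p /` and `-r true` arguments verbatim from the command above without interpreting them, invokes `python3` as shown, expects the script in the current directory, and uses a hypothetical `_reflections` suffix for output names.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Script name and flags copied from the command above; their meaning is
# not interpreted here.
SCRIPT = "computeDiffuseReflectionUVs.py"


def output_path_for(input_usd: str, suffix: str = "_reflections") -> str:
    """Hypothetical naming scheme: room.usd -> room_reflections.usd."""
    path = Path(input_usd)
    return str(path.with_name(path.stem + suffix + path.suffix))


def build_command(input_usd: str, output_usd: str) -> list[str]:
    """Build the argument list for one run of the script."""
    return ["python3", SCRIPT, input_usd, "-o", output_usd, "-p", "/", "-r", "true"]


def run_batch(inputs: list[str]) -> None:
    """Run the script once per input file, stopping at the first failure."""
    for input_usd in inputs:
        command = build_command(input_usd, output_path_for(input_usd))
        # check=True raises CalledProcessError if the script exits non-zero.
        subprocess.run(command, check=True)
```

For example, `run_batch(["room.usd", "stage.usd"])` would ask the script to write room_reflections.usd and stage_reflections.usd next to the inputs. Passing an argument list instead of a shell string avoids quoting problems with paths that contain spaces.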

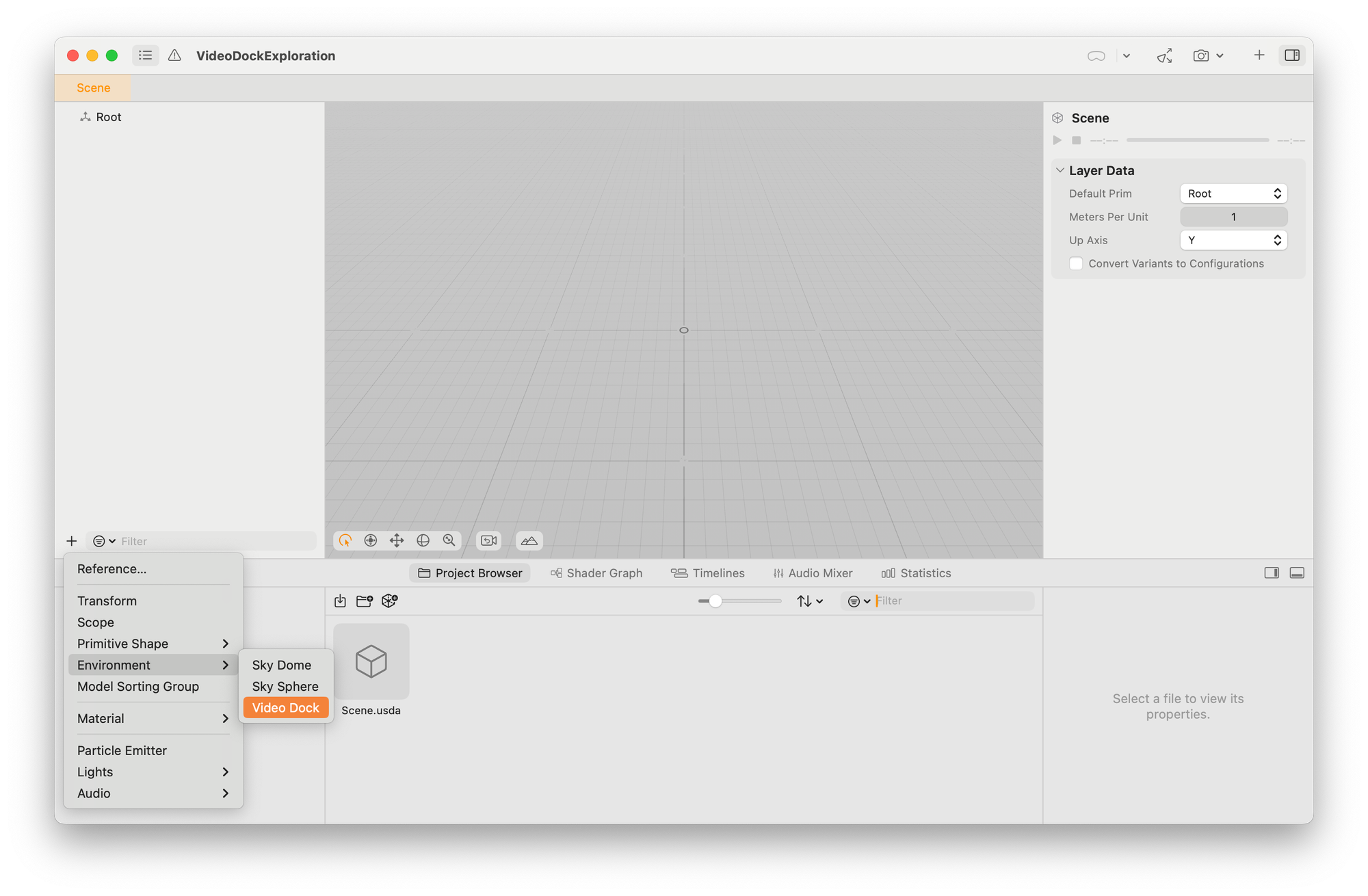

Screenshots of the setup and the steps required to turn a custom .usd into an environment in which the media is reflected.

The result: an unintended donut-shaped house of mirrors.

https://developer.apple.com/sample-code/ar/WWDC_2024_Diffuse_Reflection_UV_Computation_Tool.zip