The Aesthetics of Immersion

Explore

On February 27th, 2025, from 10:00 AM to 5:00 PM (PST), Apple ran a hybrid session at the Apple Developer Center Cupertino. At that time, Roxana and I were in Shanghai (1:00 AM to 8:00 AM (CST)), preparing the last details before our first game demonstration at Let's Vision. But since Apple's session was not going to be recorded, you have to do what you have to do…

Photo sequence of the preparation of drip coffee available in the hotel room.

The event was titled "Create interactive stories for visionOS" and contained three exceptional talks:

- Transform your ideas for Apple Vision Pro

- Design interactive stories

- Best Practices for Creating Immersive Content on Vision Pro

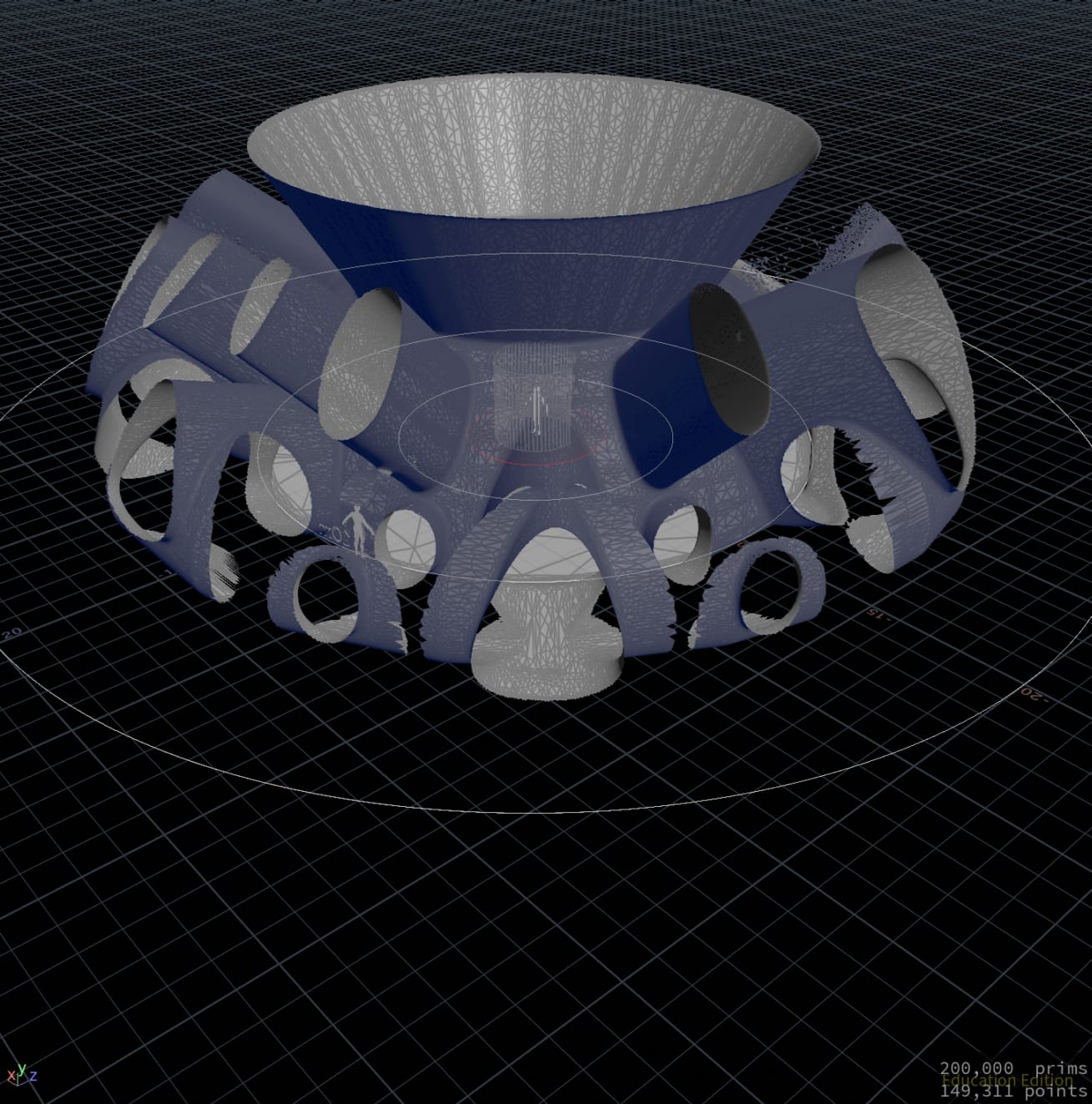

"Best Practices for Creating Immersive Content on Vision Pro" hit home for us, as we had struggled for a while to make our own assets while balancing "Impressive! How did you do this?! I've never seen anything like this before!" against "optimal use of resources" (go figure). From the first slides, which covered the use of procedural tools, we got strong validation of some of our early decisions, such as transitioning from Blender to Houdini (mentioned in our presentation at the AI Hacker House Shanghai). Apple's talk included concrete examples, and although it did not go into implementation details, it was still extremely helpful.

Adopt

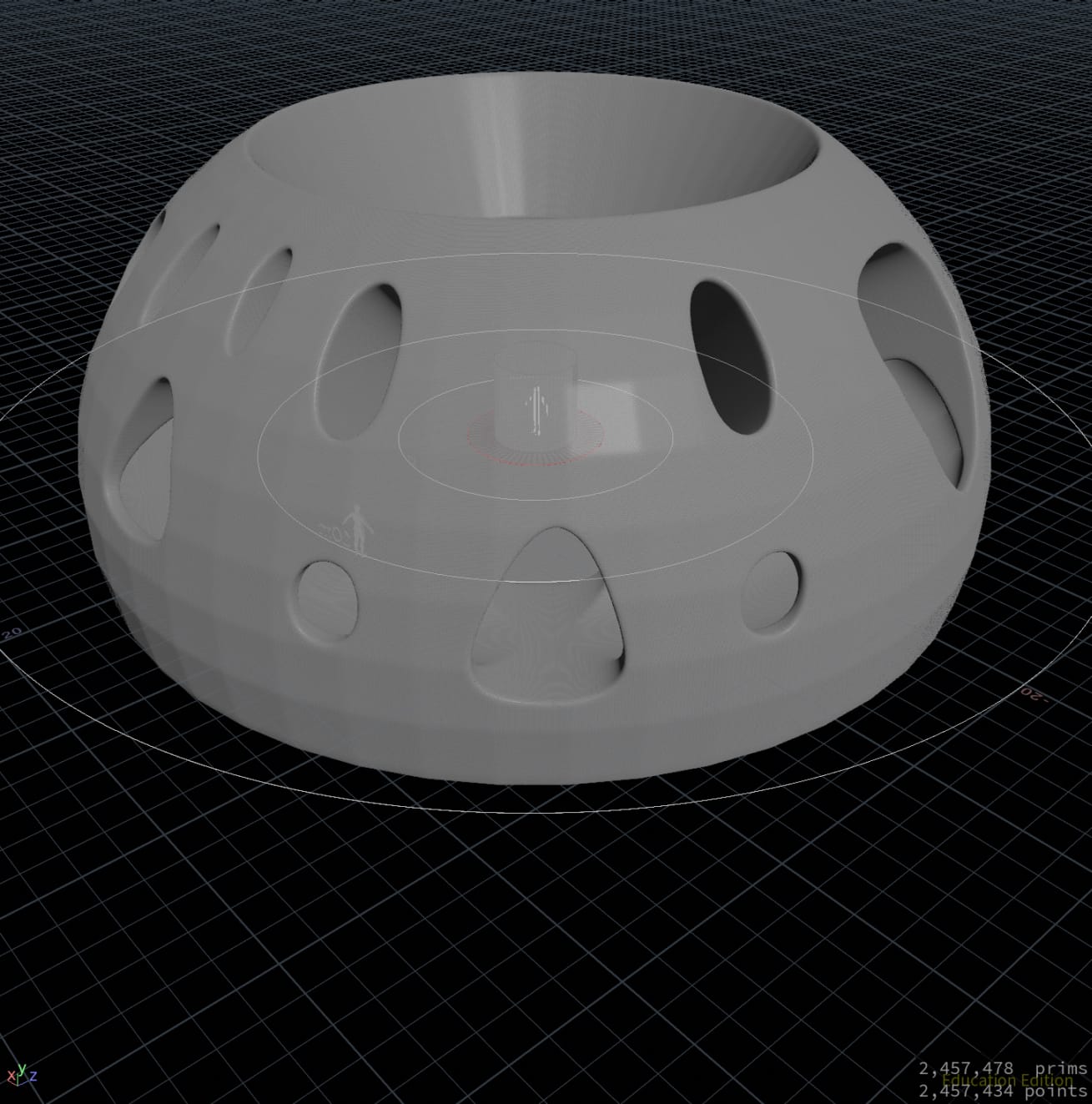

So we have some great-looking content, but to create genuine presence, a pre-rendered image isn't enough.

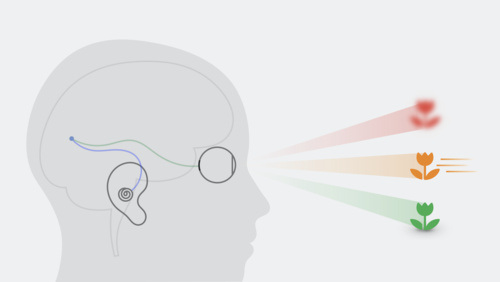

Creating presence in a virtual environment requires a surprising number of combined techniques and fields of knowledge to get right, even if we focus only on visuals. The main reason for this complexity is that perception demands very specific cues before it believes in the existence of a virtual world. And even if one can get close to an acceptable illusion, everything has to be achieved within budget constraints (aka, keep the frame rate high and the temperature low).

So, one must significantly increase control over art direction and dive deep into a variety of tools and their nuances.

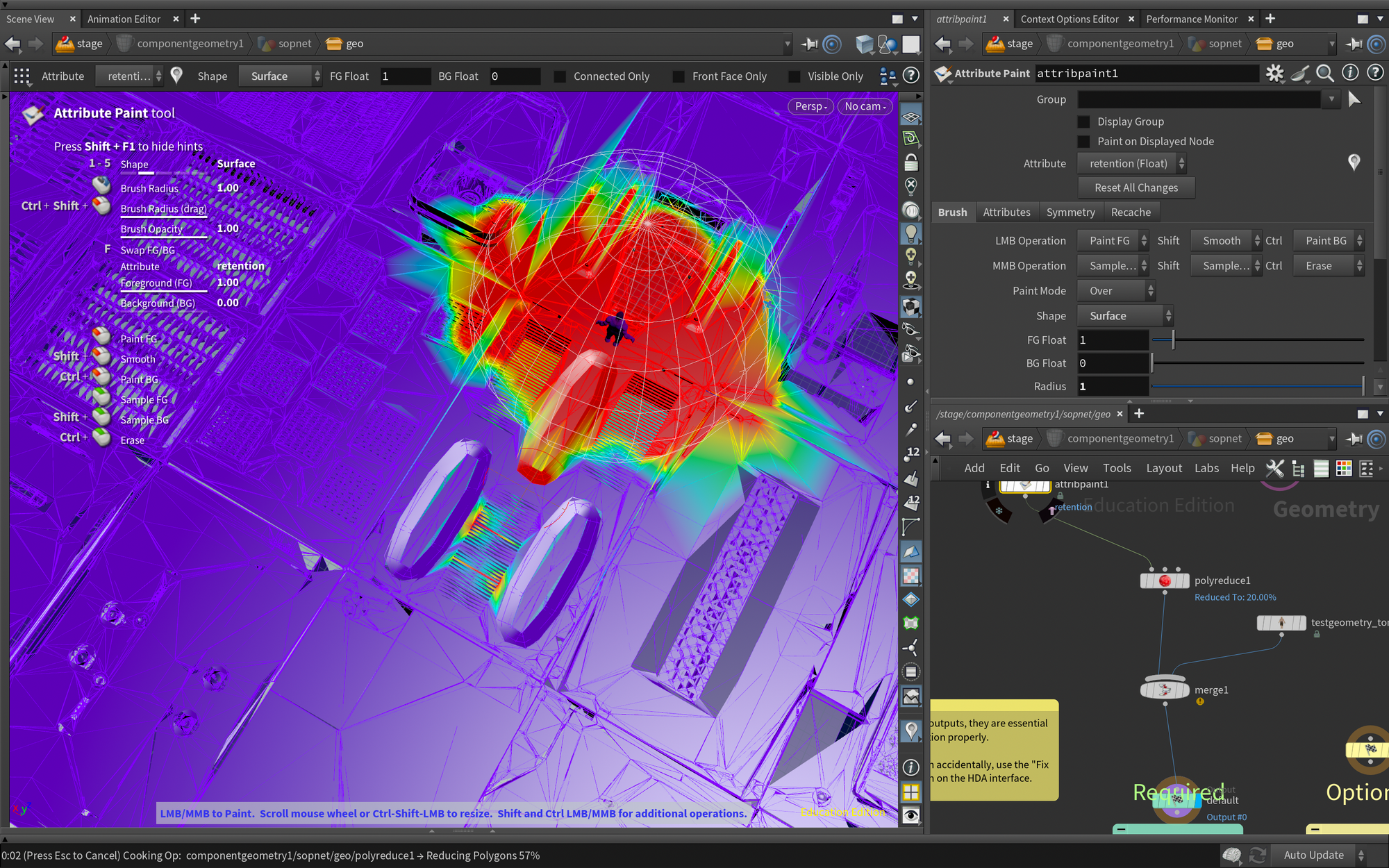

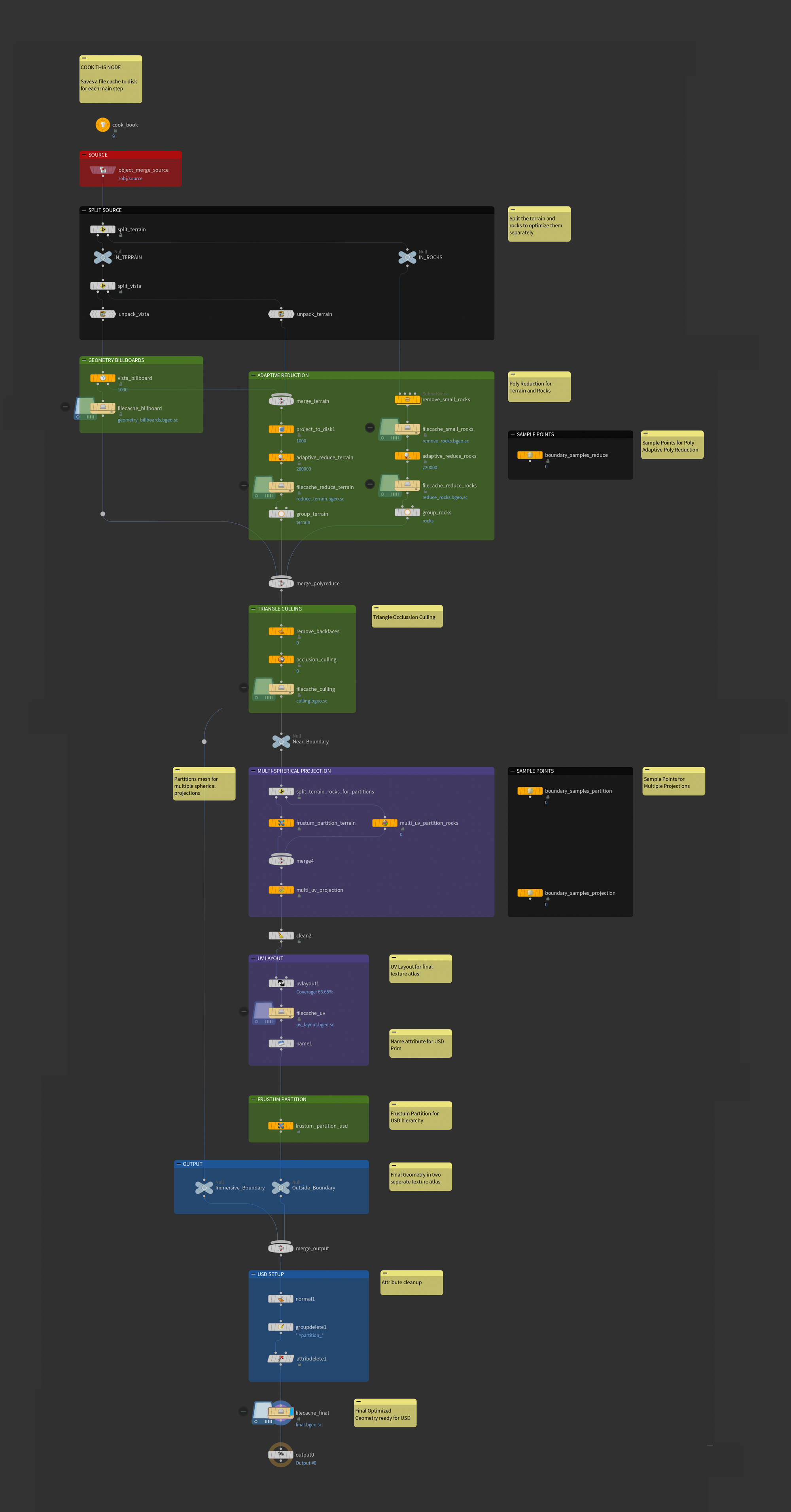

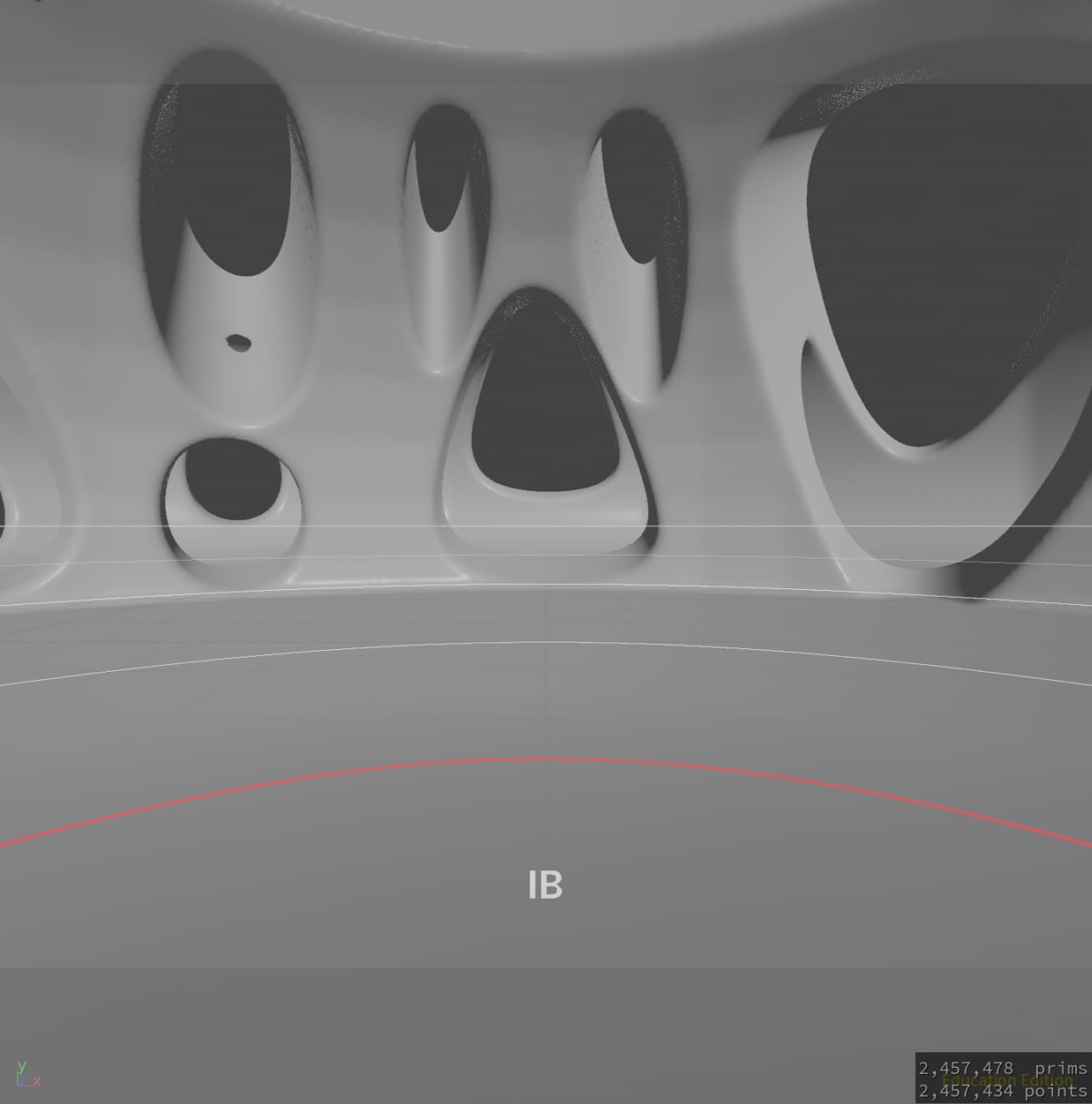

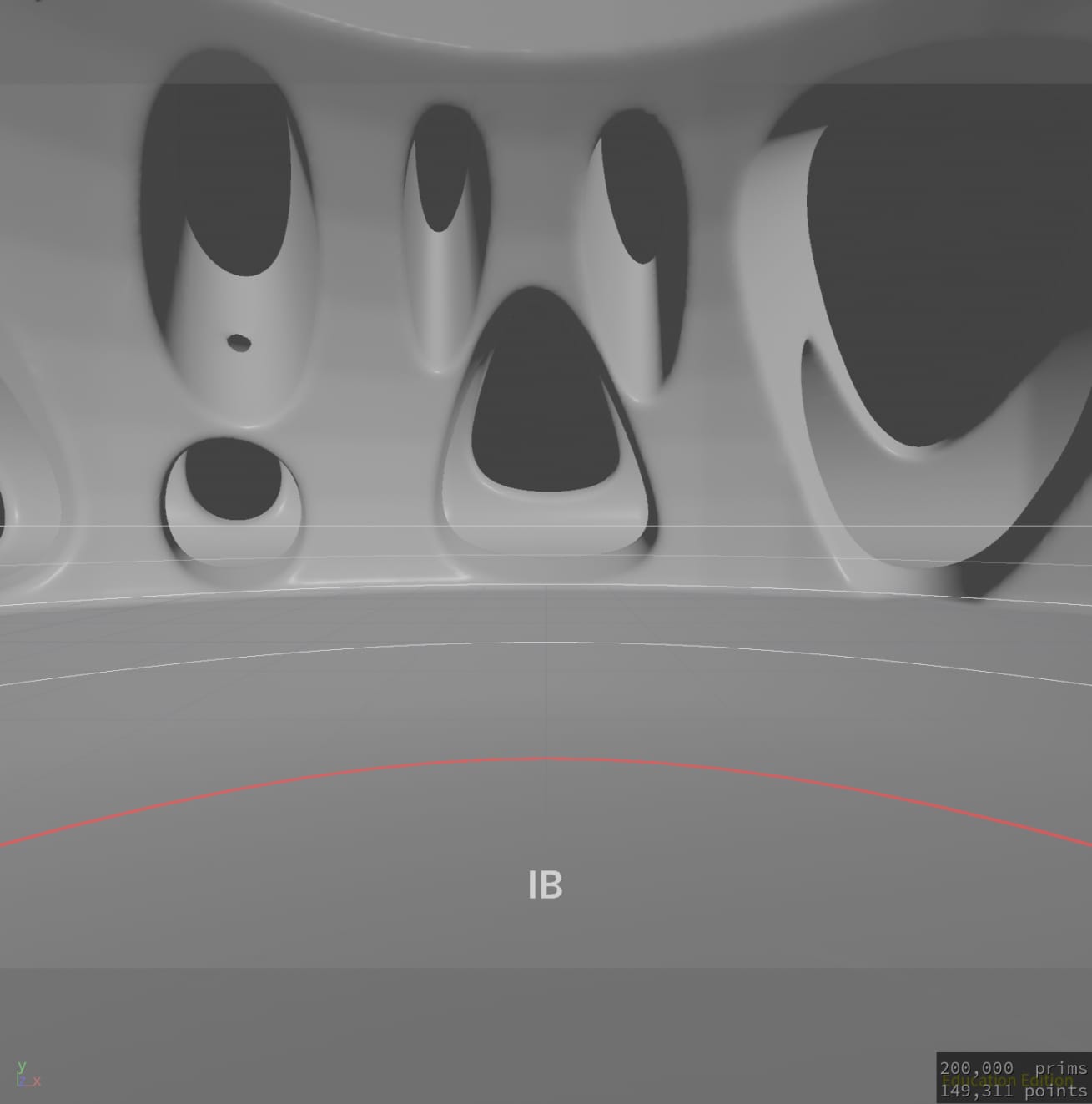

After the talk, we worked on our own optimization pipeline, trying to guess how to get results like the examples shown. We got some results pretty fast, just by having clear steps and concepts to refer to (e.g., adaptive reduction).

This type of procedural pipeline streamlines complex tasks and enables processes that would be nearly impossible to achieve manually (such as analyzing contours for preservation while efficiently reducing flat areas). Side note: this is why we think Houdini should be emphasized more as a necessary tool for this type of work.
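To make the idea of "preserve contours, reduce flat areas" a bit more concrete, here is a toy sketch in Python. It is not our Houdini network and not Apple's tooling; it only illustrates one plausible way to separate the two kinds of regions, assuming the angle between neighboring face normals is a good enough stand-in for "contour". The function names, the example mesh, and the 10-degree threshold are all hypothetical, and the sketch assumes non-degenerate triangles.

```python
import math


def face_normal(vertices, face):
    """Unit normal of a triangle (assumes the triangle is not degenerate)."""
    a, b, c = (vertices[i] for i in face)
    u = [b[k] - a[k] for k in range(3)]
    v = [c[k] - a[k] for k in range(3)]
    n = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    length = math.sqrt(sum(x * x for x in n))
    return [x / length for x in n]


def classify_edges(vertices, faces, angle_threshold_deg=10.0):
    """Split edges into 'flat' (safe to reduce) and 'contour' (preserve).

    An edge is flat when its two adjacent faces are nearly coplanar.
    Boundary edges (only one adjacent face) are always treated as contours.
    """
    # Map each undirected edge to the faces that use it.
    edge_faces = {}
    for face_index, face in enumerate(faces):
        for k in range(3):
            edge = tuple(sorted((face[k], face[(k + 1) % 3])))
            edge_faces.setdefault(edge, []).append(face_index)

    normals = [face_normal(vertices, face) for face in faces]

    flat, contour = [], []
    for edge, adjacent in edge_faces.items():
        if len(adjacent) != 2:
            contour.append(edge)
            continue
        n0, n1 = normals[adjacent[0]], normals[adjacent[1]]
        # Clamp to guard acos against floating-point drift.
        dot = max(-1.0, min(1.0, sum(p * q for p, q in zip(n0, n1))))
        angle = math.degrees(math.acos(dot))
        if angle < angle_threshold_deg:
            flat.append(edge)
        else:
            contour.append(edge)
    return sorted(flat), sorted(contour)


# Hypothetical example: a flat floor (two triangles) plus one wall triangle.
example_vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.0, 1.0, 0.0),
    (0.0, 1.0, 0.0),
    (1.0, 0.5, 1.0),
]
example_faces = [(0, 1, 2), (0, 2, 3), (2, 1, 4)]
flat_edges, contour_edges = classify_edges(example_vertices, example_faces)
```

In this example the diagonal across the floor ends up in `flat_edges`, while the fold between floor and wall and all open borders end up in `contour_edges`. A real reduction pass would then collapse edges preferentially in the flat set, which is the part a procedural tool handles far better than this sketch.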

So we got as close as our understanding allowed. Our tools worked, but we had this dream of getting a sneak peek at the actual tools Apple uses on a daily basis. To our surprise, that's exactly what we got at WWDC25 with the "Optimize your custom environments for visionOS" session: a great 32-minute sequel, with even deeper technical knowledge and, this time, explicitly Houdini-oriented.

Additionally, a comprehensive set of tools was released: the immersive environment optimization toolkit. This collection of sophisticated HDAs, when used in the correct sequence, can significantly enhance performance for various Apple Vision Pro-specific scenarios.

So, naturally, we embraced the use of those tools, resulting in modest gains of up to ~90% less resource usage 💥.

Learn

Geometry creates presence.

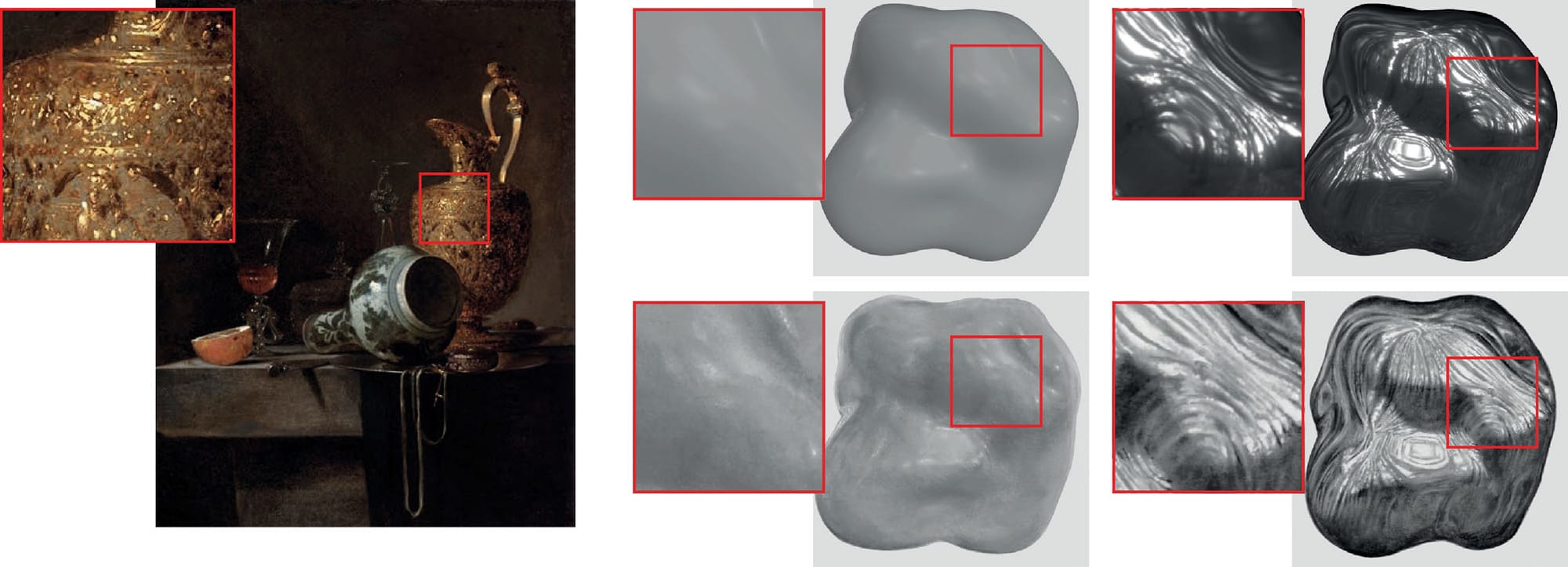

When I was in art school, I had to learn effects like sfumato, cangiante, chiaroscuro, unione, and a bunch of other tricks for representing materials, atmosphere, and so on… Then there was the glazing technique. It involves applying a very thin layer of oil paint diluted with turpentine over an already dry base, over and over (and over and over). This allows light to pass through the upper layers and reflect off the ones beneath, increasing the effect of depth, volume, and subtle gradients. To apply it successfully, one can do no more than one or two layers per day (because oil paint needs exposure to air to dry). Consider this: waking up, going to the studio, applying a layer, stepping away, and waiting for the paint to dry throughout the day. And by the way, every single layer has to have a bit more oil, meaning the more layers you apply, the slower they dry. You learn that you cannot rush this because, of course, we tried applying heat, and fun fact: oil paint melts. So that is labor-intensive too, but in a way that comes down to, first, knowing how and when to use seemingly disconnected techniques for a specific purpose, and second, having the patience to accept that it is a process that cannot be rushed.

Develop

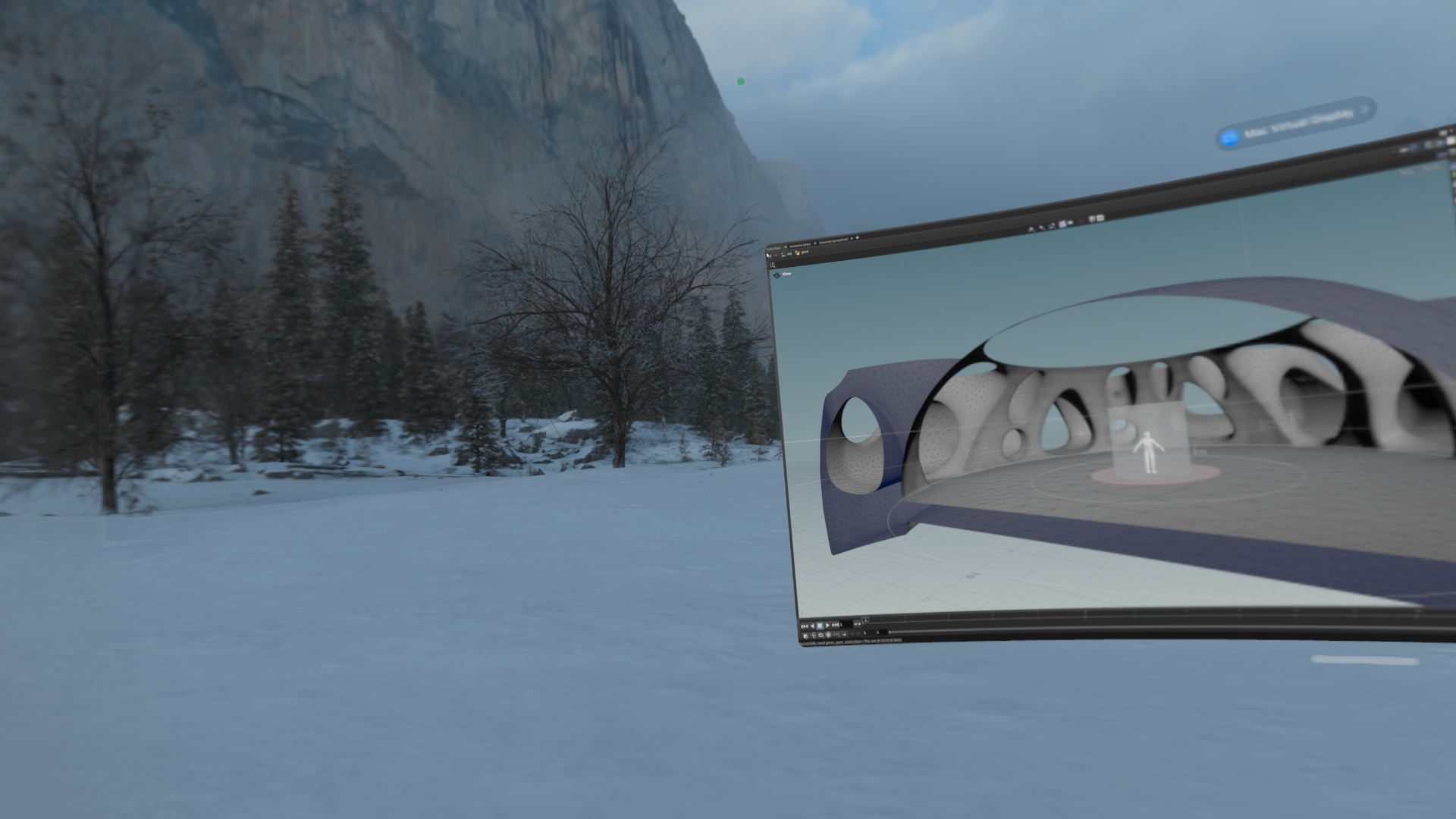

Anyway, the other day I was iterating on our game environment. I use Mac Mirror on the AVP, and I also like to work immersed in an environment at 50% or so. That day, I was in Yosemite. My attention was drawn to a specific part of the scene that I don't typically notice, which had a tree. I was so fixated that I kept leaning my head to look at it; it felt a bit like being an art student again, copying a model from behind the easel. The whole mix of repetition, patience, and context was there when it struck me.

Immersive environments are a form of realistic painting.

Reflect

Of course, no wonder those who create this type of stuff call themselves technical artists.

Recognize

Yeah. Now that I was thinking of immersive environments as a piece of art in their own right, I started to fantasize about exhibitions at the MoMA, the Guggenheim, and such, thinking about the logistics of how to display and maintain them over time. Then I read a post about Berlin being a capital of culture and art but lacking in XR, and the comments doubled down on the need for more immersive spaces in cities where people can experience a new type of art medium. And then I realized that I was thinking of a physical museum as the only way to validate immersive environments as art, which means going to a physical place to experience a virtual space. How paradoxical is that? Right?

Is it necessary for the immersive piece to be physically located in order to have this value? Is this similar to a canvas frame that represents ownership? So I believe there is some friction between considering art and exhibiting it in an art space, and the space itself having to be physical.

Continue

At times, the complexity of accomplishing tasks in spatial computing can feel frustrating and overcomplicated. The need to learn concepts that may seem distant from the main concern can make the task even more daunting and risky. But the reality is that when you take on certain types of work and aim for a certain level of quality, it is always the same, no matter if you are woodworking, painting, or programming. Art is the expression of excellence attained through the careful application of skills and techniques. In other words, anything done with care and skill qualifies as art.

https://developer.apple.com/events/view/AN79Z25A7D/dashboard

| Study | Study Focus (Painting/VR/Both) | Primary Perceptual Cues Studied | Implementation Context | Key Findings |
|---|---|---|---|---|
| Pardo et al., 2018 | VR | Color, shading, texture, definition, geometry, chromatic aberration, pixelation | Virtual reality (VR) with 3D scanned objects, forward vs. deferred rendering | High visual fidelity in VR; realism linked to color, texture, and definition; deferred rendering enhances realism |
| Interrante et al., 2008 | VR | Egocentric distance, spatial perception, presence | Head-mounted display (HMD)-based VR, matched real and virtual rooms | Distance underestimation in VR; presence and environmental reliability affect perception |
| Loomis, 2002 | VR | Visual space perception, locomotion, navigation | Immersive VR (abstract only) | Distance underestimated in VR; VR allows decoupling of perceptual cues |
| Delanoy et al., 2021 | Both | Material appearance (contrast, highlights, glossiness) | Paintings vs. renderings, web-based 2D images | Material properties perceived similarly in both media; key image features drive perception |
| IJsselsteijn et al., 2008 | VR | Movement parallax, blur, occlusion | Projected photorealistic scenes, virtual video | Cues enhance depth perception; parallax most effective |
| Hornsey and Hibbard, 2021 | VR | Binocular disparity, linear perspective, texture, scene clutter | VR with Oculus Rift, trisection and size constancy tasks | Linear perspective and binocular cues improve distance accuracy and precision |
| de Dinechin et al., 2021 | VR | View-dependent effects (specular highlights), presence | Image-based rendering, multi-camera VR | View-dependent effects enhance realism and presence |
| Riecke et al., 2006 | VR | Vection, presence, ecological relevance | Curved projection screen, naturalistic 3D scenes | Cognitive factors (presence, ecological relevance) enhance vection |
| Fischnaller, 2017 | Both (focus on VR) | Perspective, multisensory engagement | Immersive VR exploration of paintings, haptics | Perspective and multisensory engagement findings reported |
| Fulvio and Rokers, 2017 | VR | Motion parallax, visual feedback, head jitter | VR with Oculus Rift DK2, 3D motion tasks | Visual feedback essential for learning to use motion parallax; head jitter improves 3D motion perception |