Guide to the latest in Spatial Computing (WWDC24)

Introduction

On a wearable platform with access to highly sensitive information, where consumers and customers still question what the real use cases are, how do you balance protecting privacy with enabling every use case developers come up with?

That is exactly what WWDC24 was about. At first glance the visionOS 2.0 release looks light on updates. Beneath the surface, though, it holds an overwhelming number of new features for developers: a compilation of answers to the feedback and requests gathered during the platform's first year.

Some numbers

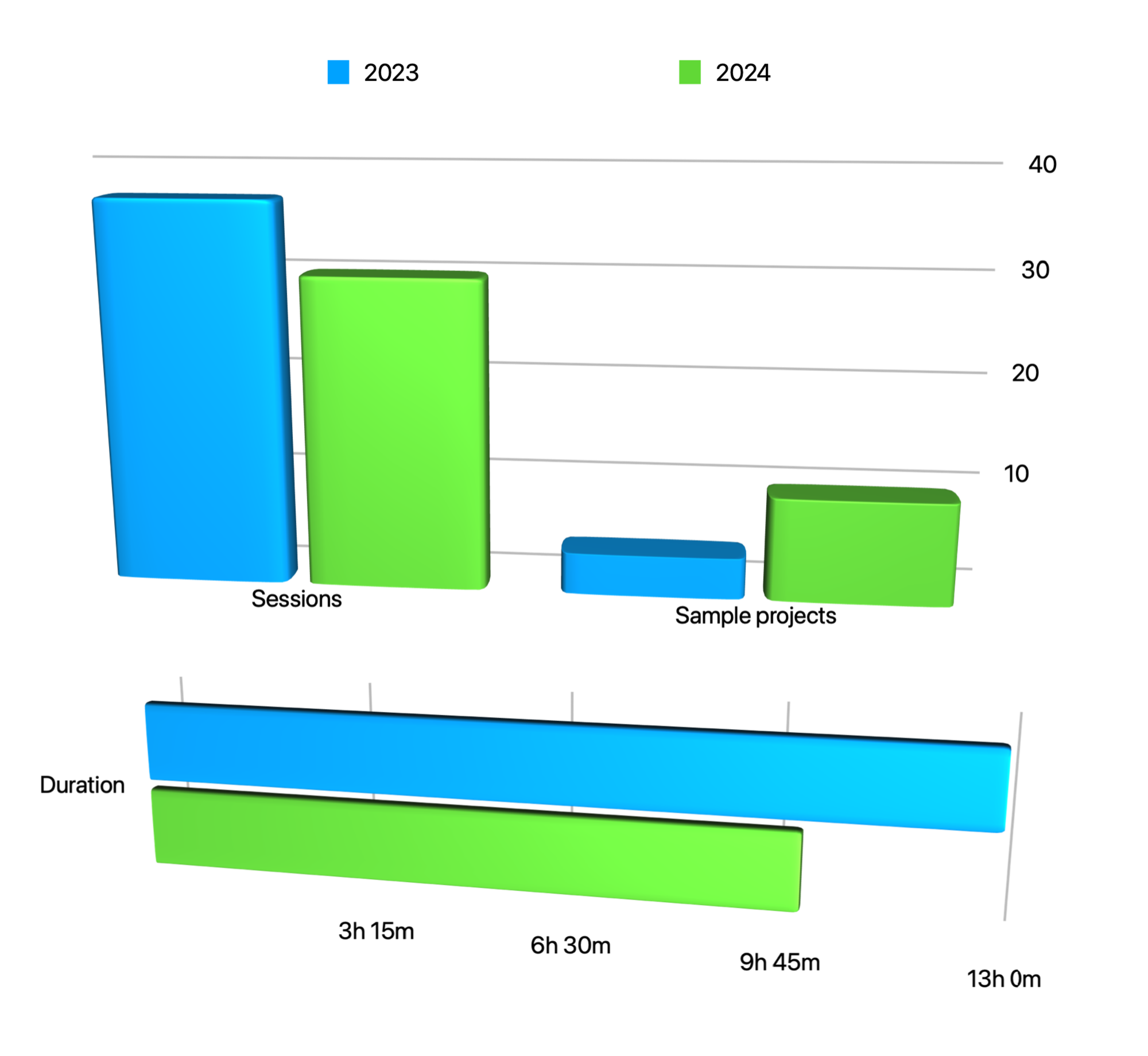

Metric differences between editions don't tell the whole story, but they do help show where the effort is focused.

| Edition | Sessions | Total duration (hh:mm:ss) | Sample projects |
|---|---|---|---|
| 2023 | 37 | 12:49:55 | 4 |
| 2024 | 30 | 10:06:28 | 10 |
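The table can be worked through directly. Converting each edition's total duration to seconds and dividing by its session count gives the average session length. A relative change puts the two editions side by side. This is a minimal sketch that uses only the figures listed in the table; the helper names (`to_seconds`, `average_minutes`, `percent_change`) are illustrative, not from any Apple tool.

```python
"""Derive per-session averages from the WWDC edition table (figures copied from the table)."""


def to_seconds(hms: str) -> int:
    """Convert an 'hh:mm:ss' duration string into total seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def average_minutes(sessions: int, duration: str) -> float:
    """Average session length in minutes for one edition."""
    return to_seconds(duration) / sessions / 60


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, as a percentage."""
    return (new - old) / old * 100


# edition -> (sessions, total duration, sample projects), as listed in the table
EDITIONS = {
    2023: (37, "12:49:55", 4),
    2024: (30, "10:06:28", 10),
}


if __name__ == "__main__":
    for year, (sessions, duration, samples) in EDITIONS.items():
        avg = average_minutes(sessions, duration)
        print(f"{year}: {avg:.1f} min per session, {samples} sample projects")

    old_sessions, old_duration, _ = EDITIONS[2023]
    new_sessions, new_duration, _ = EDITIONS[2024]
    print(f"Sessions: {percent_change(old_sessions, new_sessions):+.1f}%")
    print(f"Total duration: "
          f"{percent_change(to_seconds(old_duration), to_seconds(new_duration)):+.1f}%")
```

The average session stays at roughly 20 minutes (about 20.8 in 2023 and 20.2 in 2024), while sessions drop by about 18.9% and total duration by about 21.2%. So the shorter edition comes from having fewer talks rather than shorter ones, even as sample projects went from 4 to 10.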

Keep in mind that some sample projects were added right before WWDC24. All of the original ones have been updated, and some of them now need visionOS 2.0 as a minimum target.

TL;DR: A lot of energy went into documentation and practical resources.

Some words

Here are the opening words of this year's session titles, ordered by how often they appear.

- What’s new (x6)

- Create (x3)

- Explore (x3)

- Build (x2)

- Design (x2)

- Discover (x2)

- Enhance (x2)

- Meet (x2)

- Optimize (x2)

- Break into

- Bring

- Compose

- Customize

- Dive deep

- Get started

- Introducing

- Migrate

- Render

- Work with

Titles remain action-oriented, with marketing touches of innovation and creativity. There is also a strong emphasis on onboarding and developer engagement, and less on presenting the foundations. Apple wants developers not just to use these new technologies but to understand them deeply and leverage them.

Sessions

Categorizing the talks by content or purpose reveals a significant focus on entertainment and gaming: about one-third of the sessions are dedicated to this topic.

Entertainment

1/3 of the sessions dedicated to the topic

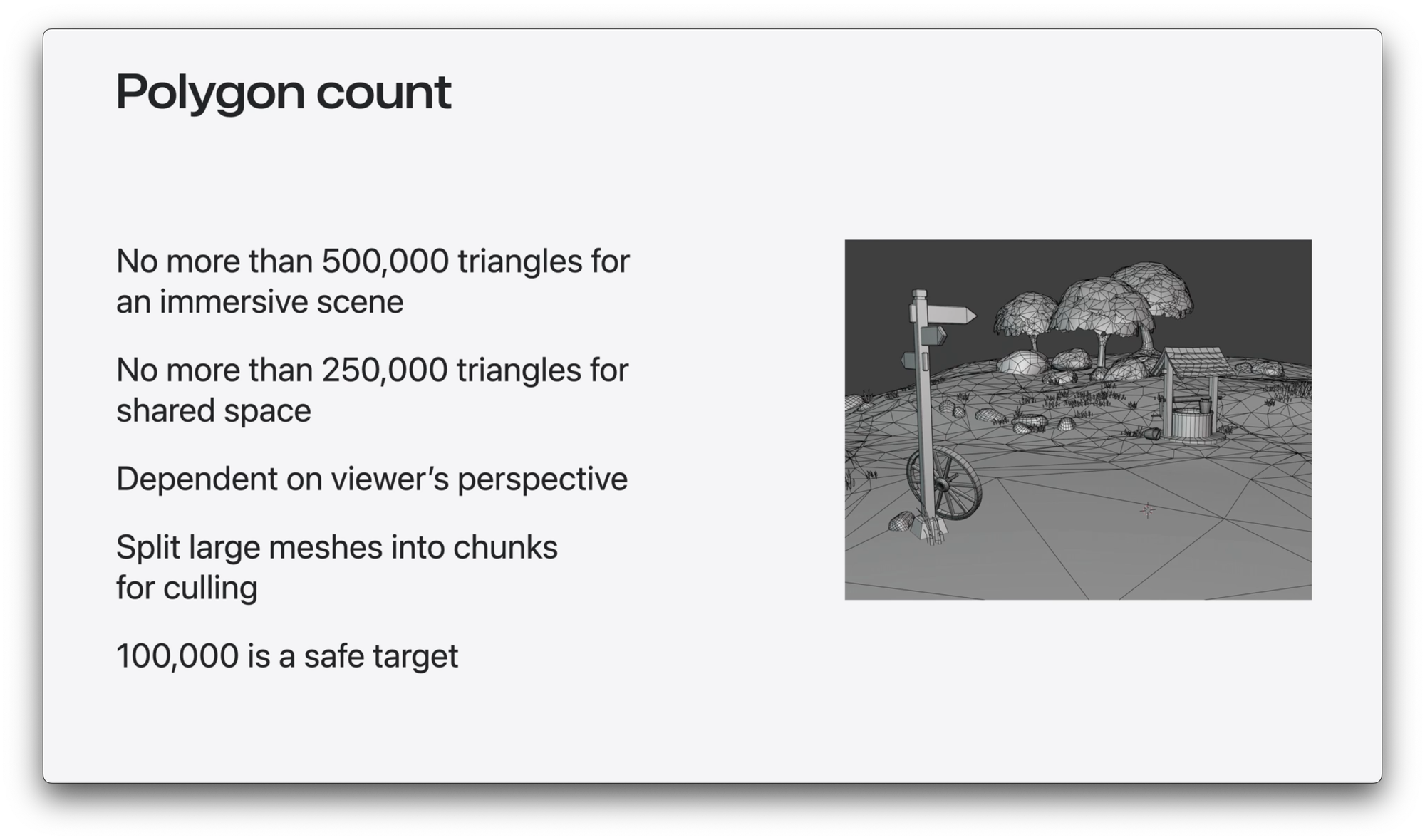

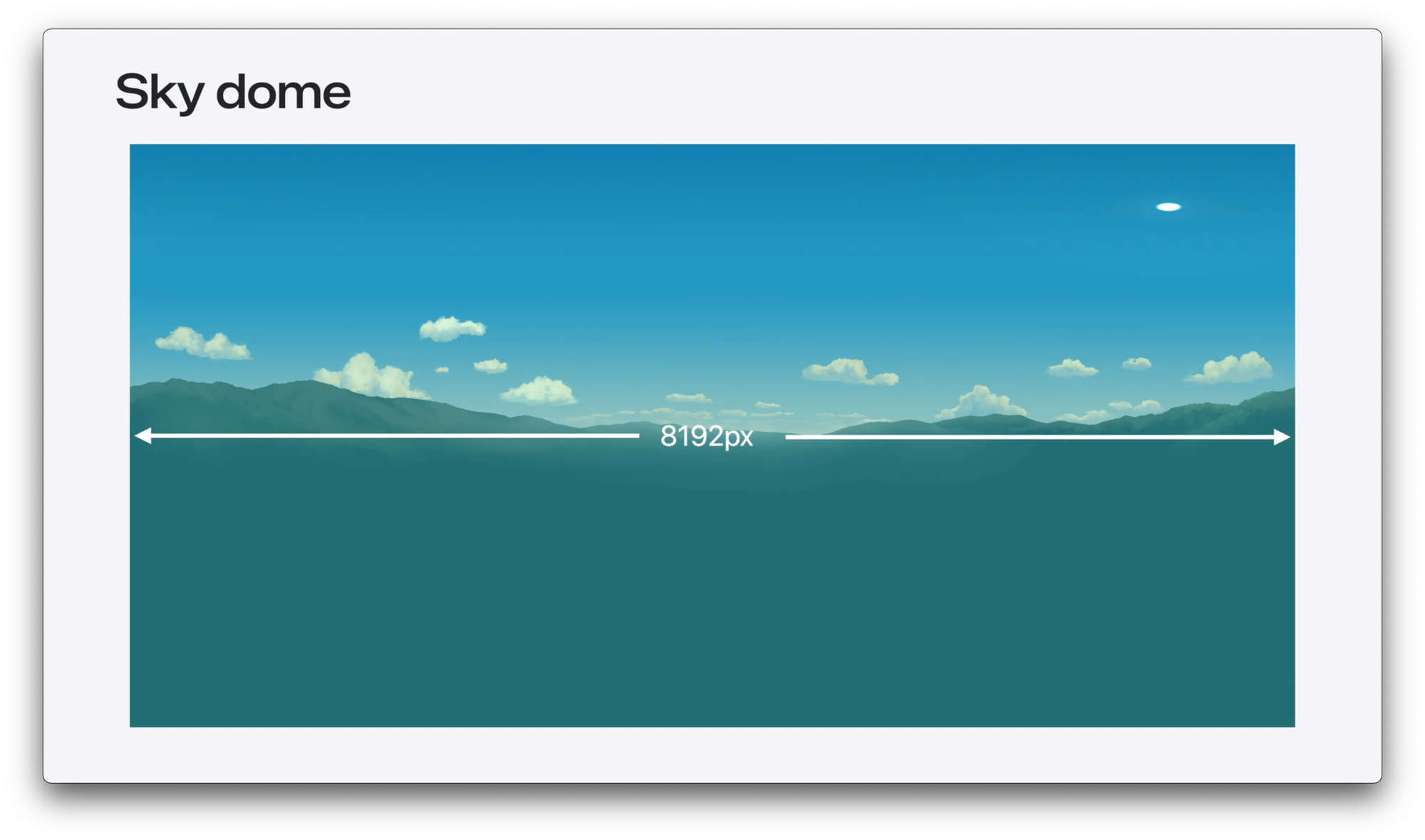

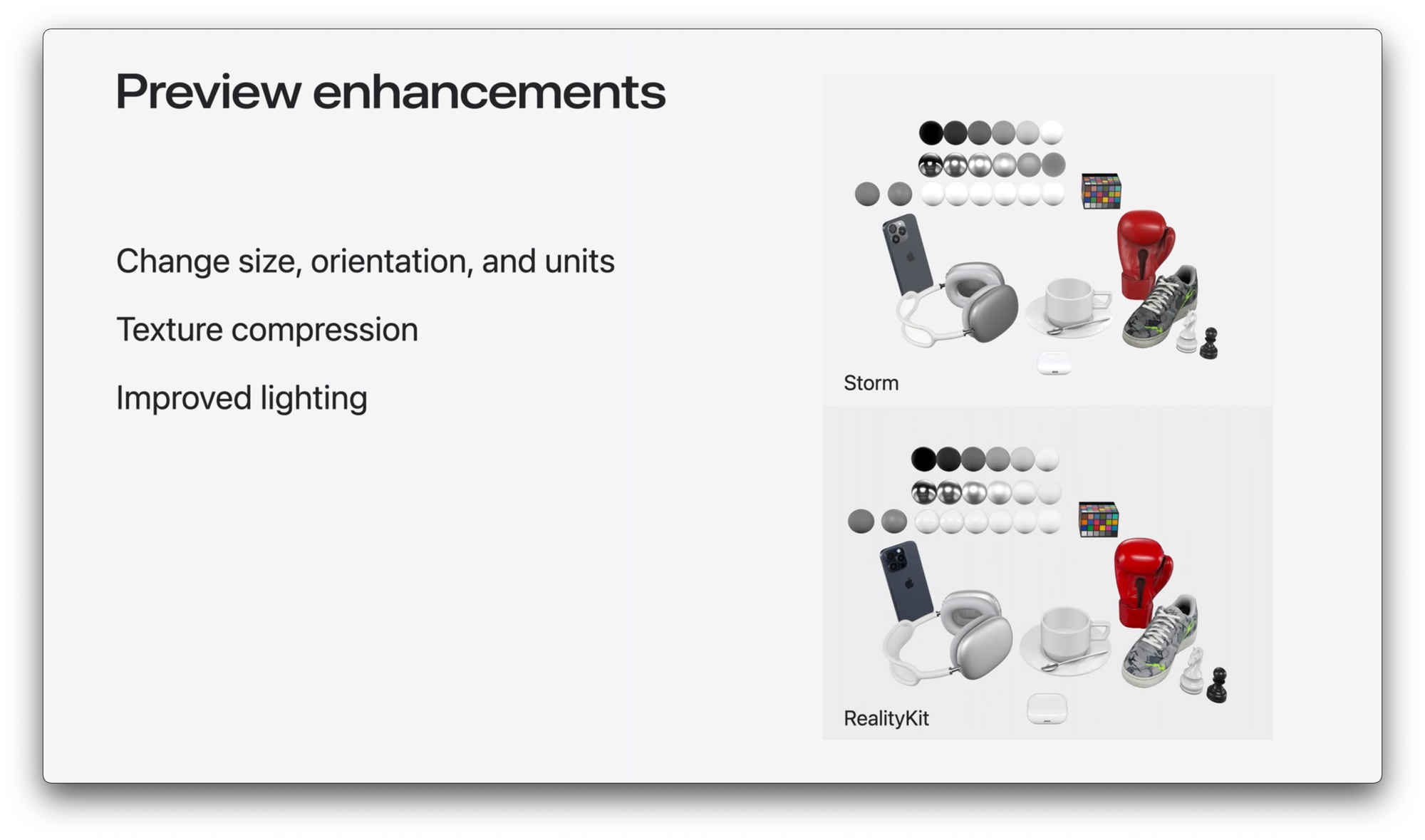

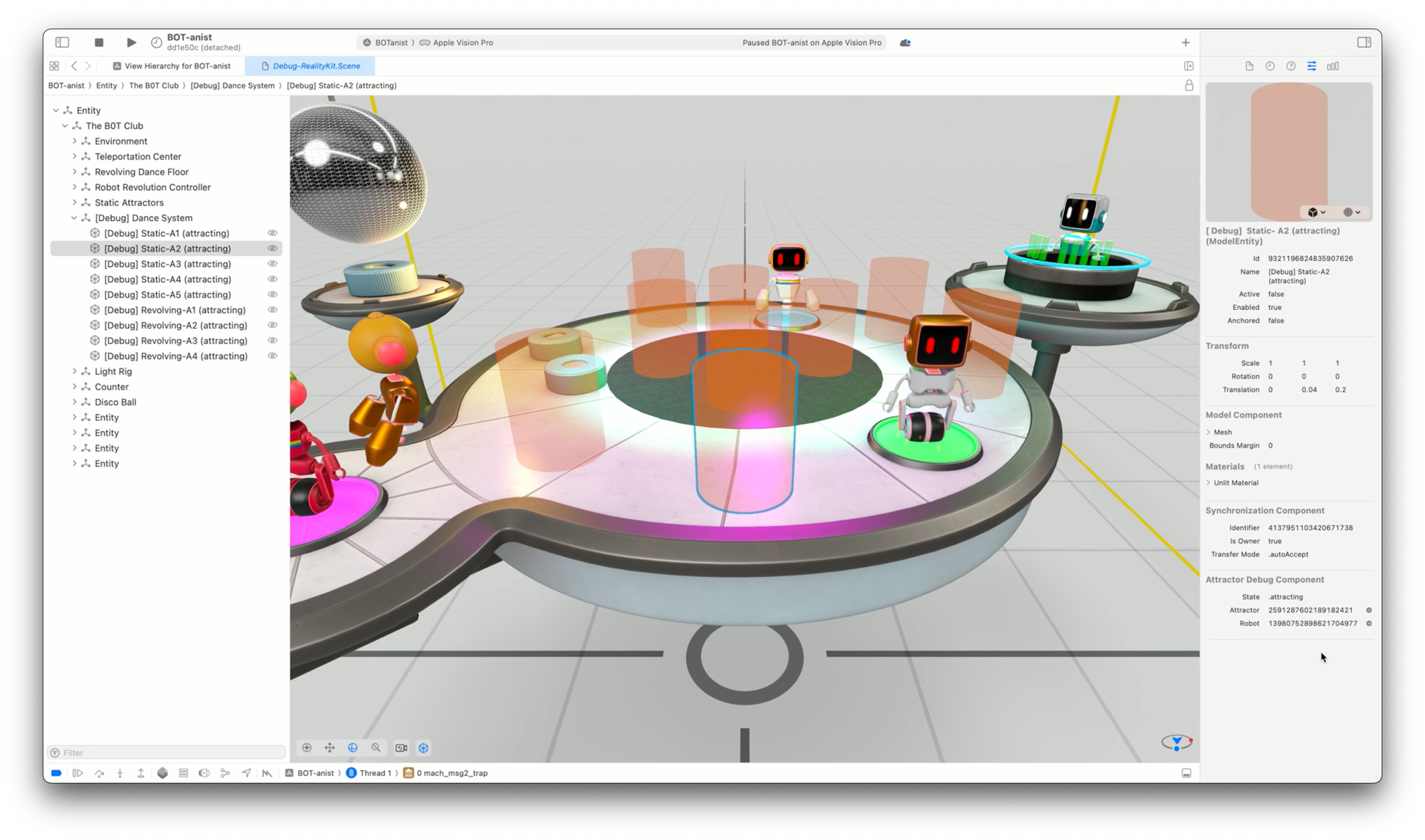

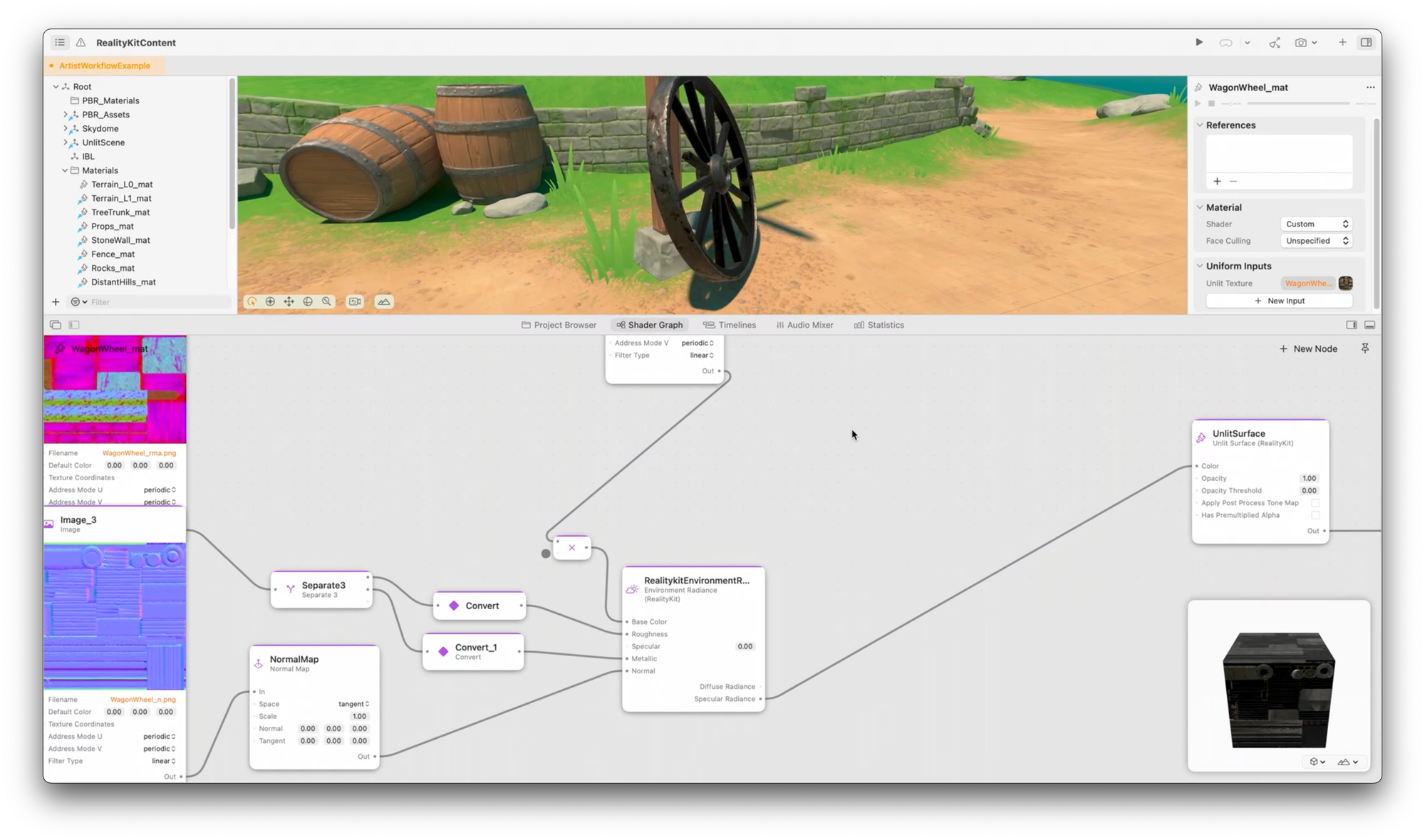

Tooling and pipelines: several sessions tackled a problem many teams currently face, how to go from idea to product (which tools? which formats? how to present?). They detail the process with many helpful tips covering the intermediate steps, optimization techniques, debugging, and best practices, combined with real-world experiences from other developers. They also include more references and statements than ever before.

Screenshots of various sessions displaying technical tips and highlighting the new tools that streamline pipelines and creation overall

Then there are steady, evolutionary steps for shared activities (including custom Persona templates and FaceTime simulation).

A compelling chunk of this year's sessions focused on open standards, with outstanding videos about OpenUSD, MaterialX, and WebXR. They also cover the great work done to add new input modes to the standards (transient pointer et al.).

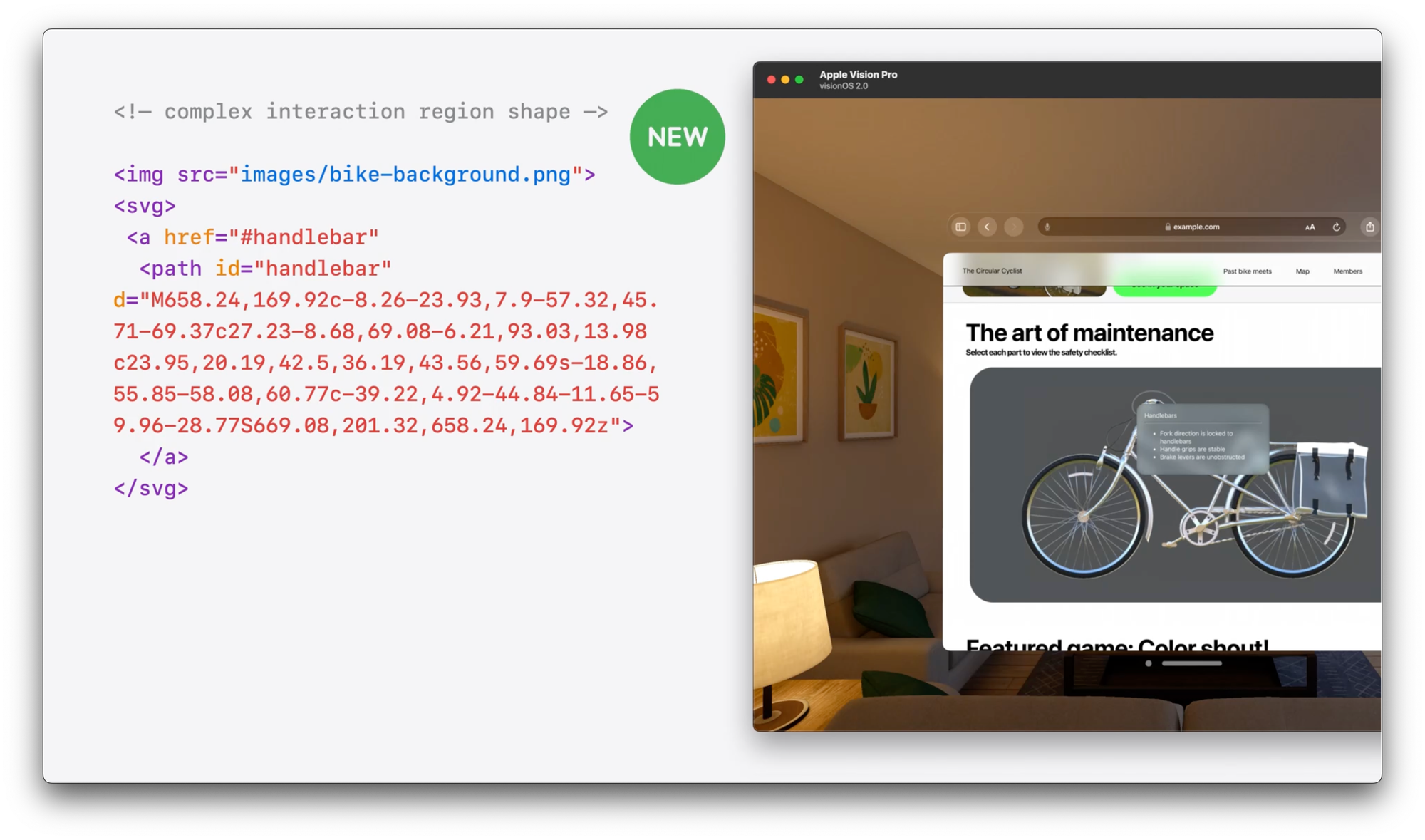

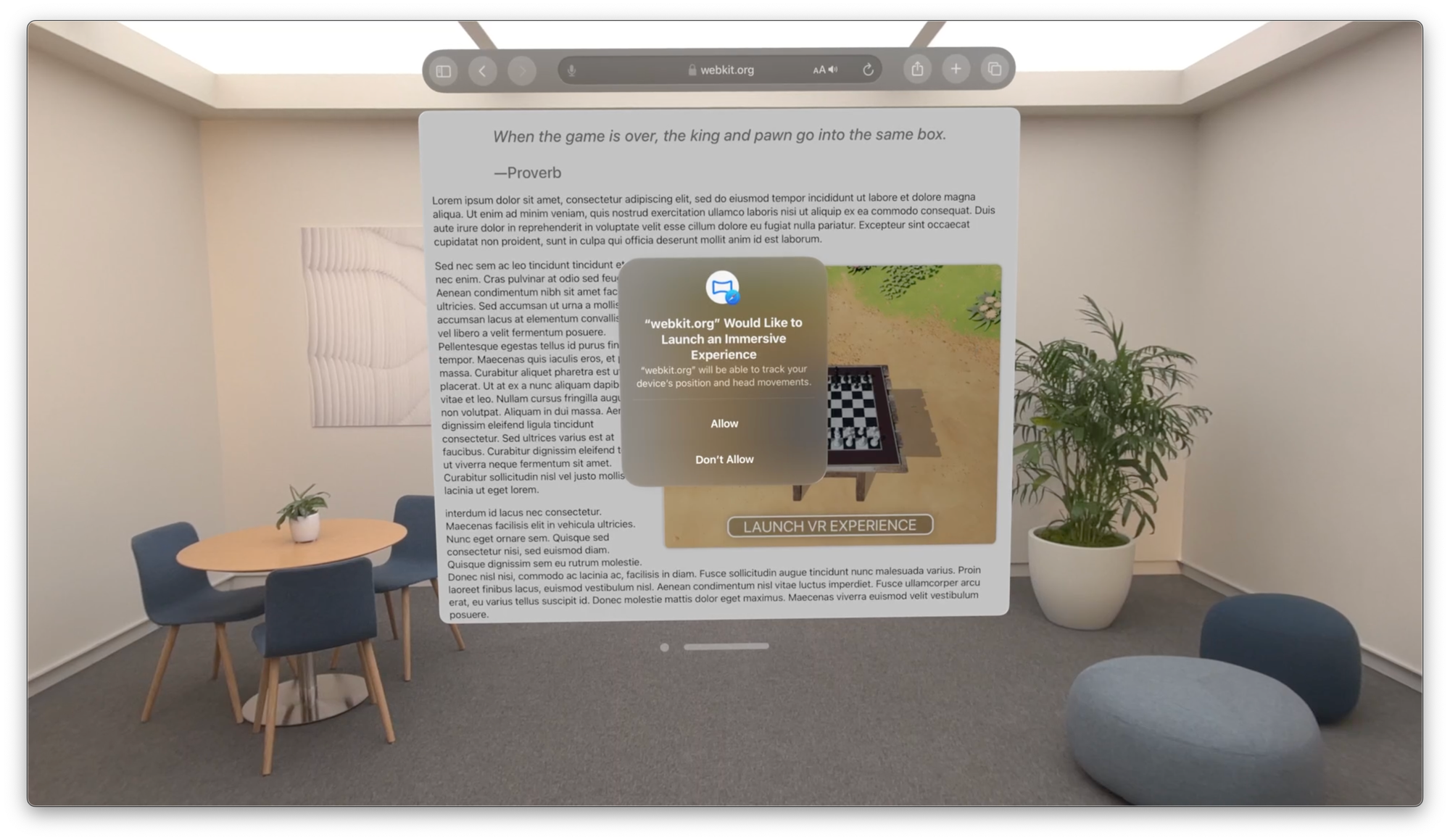

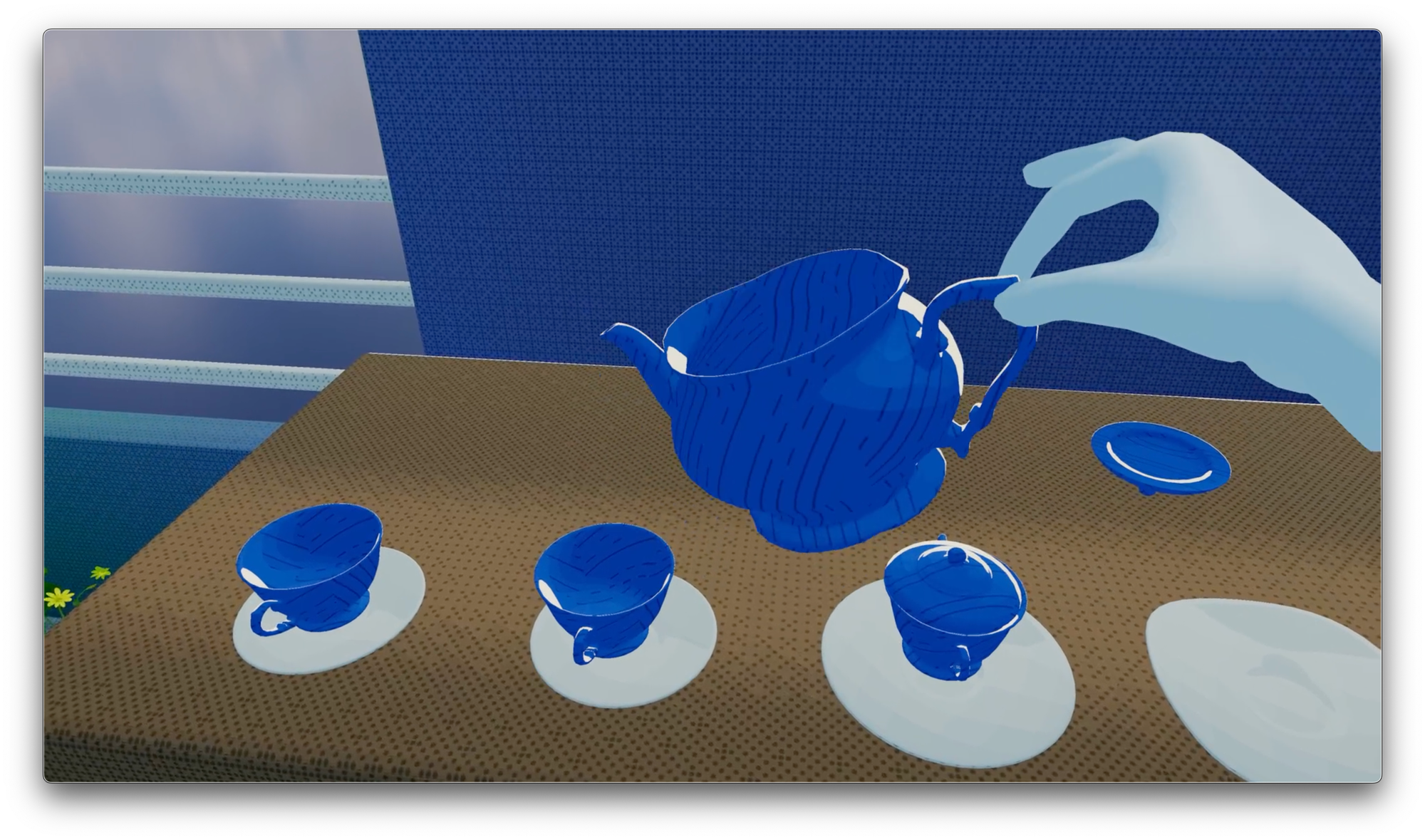

Screenshots showing custom interaction regions for visionOS Safari and numerous WebXR improvements, such as immersive experiences and precise hand tracking

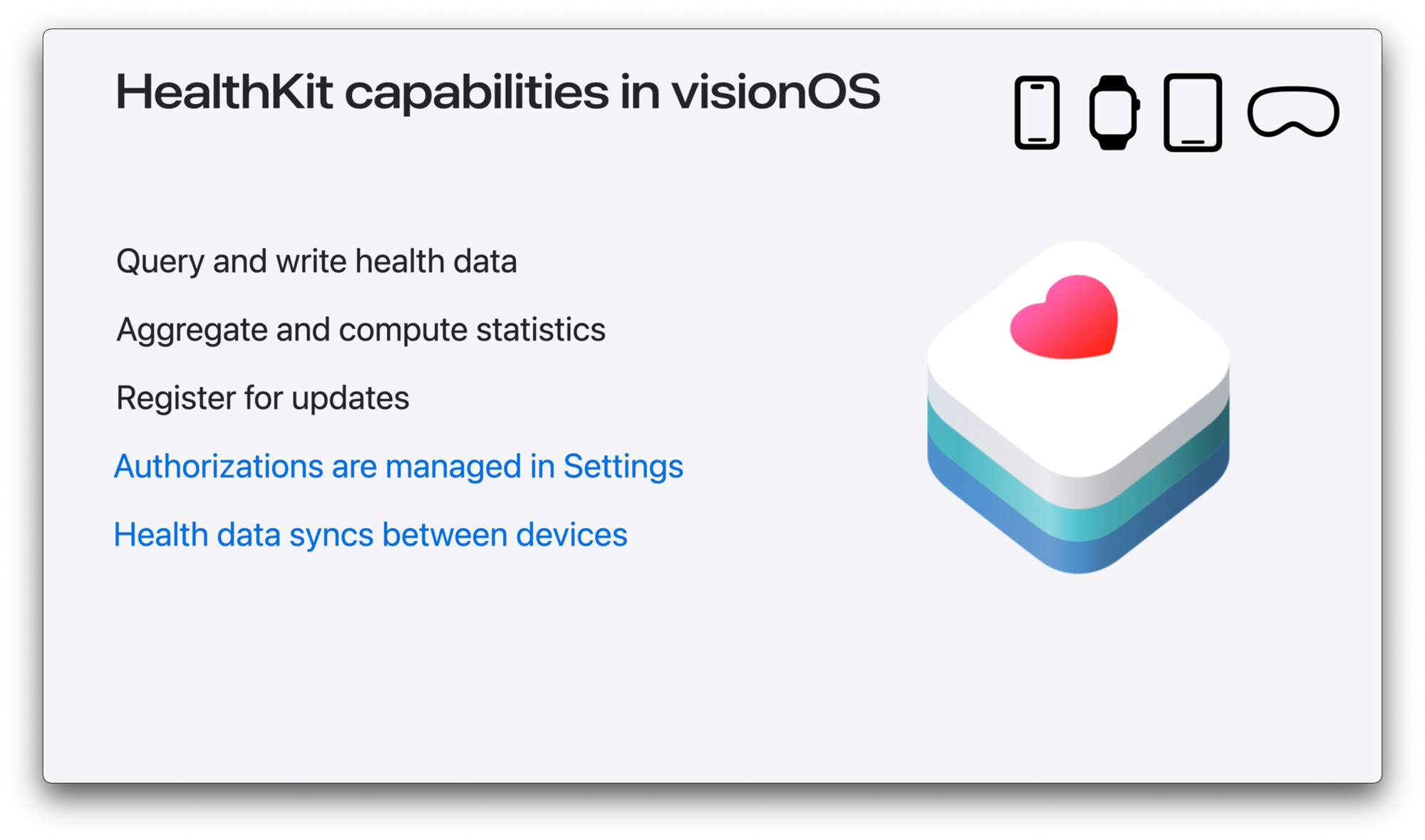

The introduction of HealthKit for visionOS is something to pay close attention to in the future.

Eye tracking data is health data. That means it needs to be protected as such, but not necessarily treated as radioactive.

Avi Bar-Zeev

Sound kept its spot and delivered once again, including support for the Web Audio and Web Speech APIs.

Then there was the backbone: a relatively small share of sessions (less than 10% of the total) dedicated to API expansions.

Productivity?

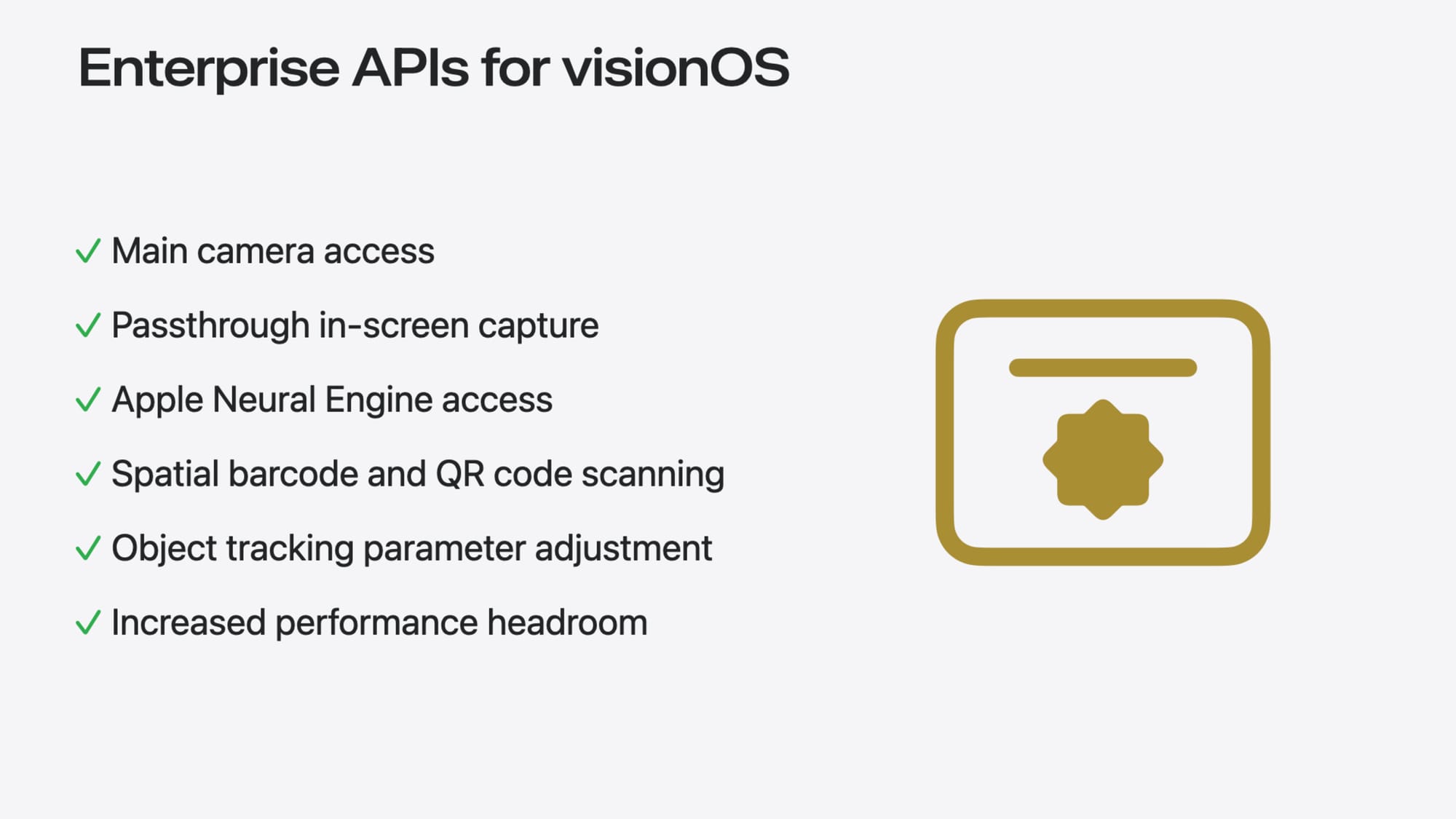

I would have been puzzled, and would have assumed a shift in stance for Apple Vision Pro (AVP) this year, if it weren't for the short but extremely important release of the Enterprise APIs for visionOS

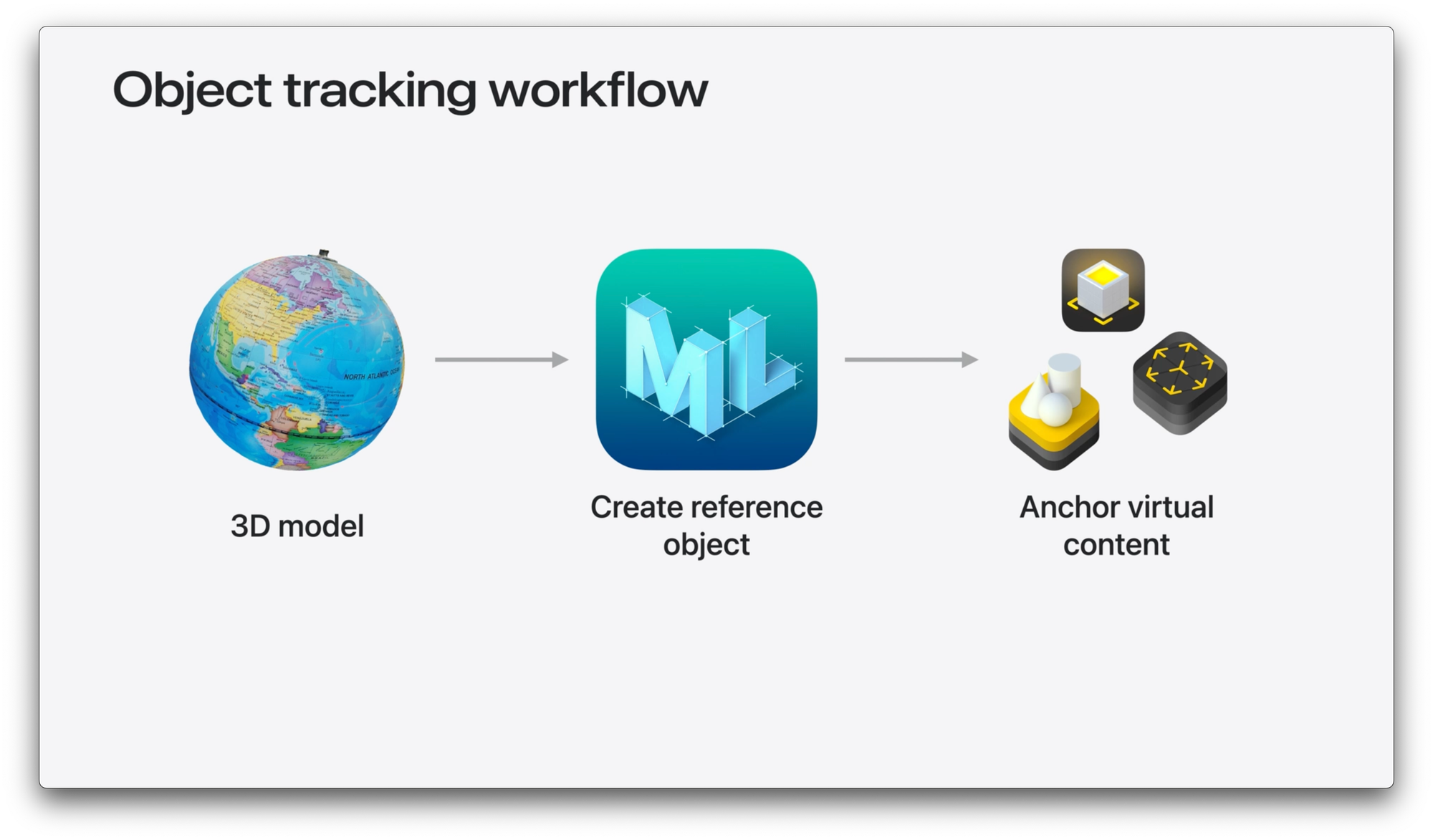

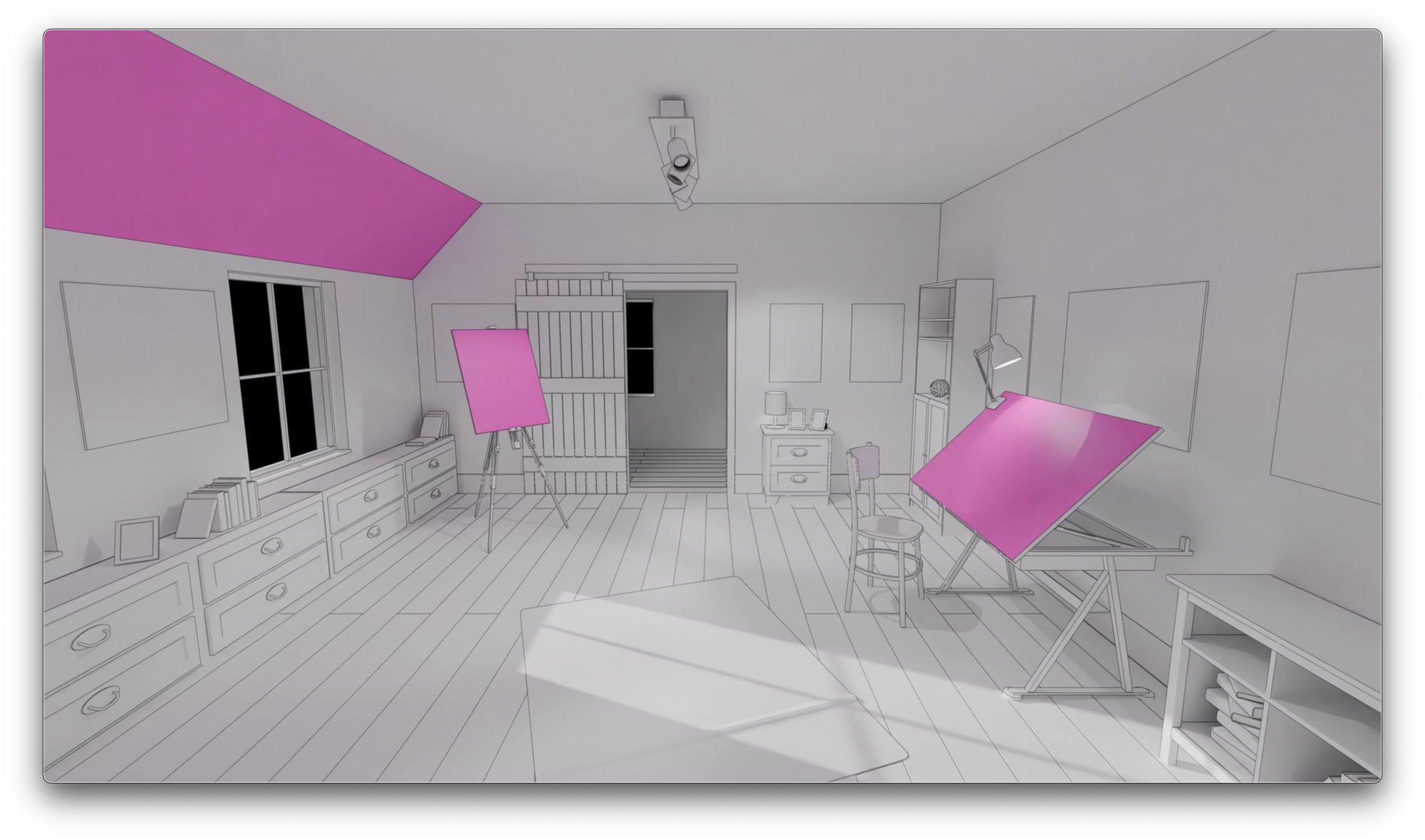

...and the ARKit advancements in object tracking, plane detection, and room tracking.

Object tracking workflow and slanted planes are some of the platform's key developments this year

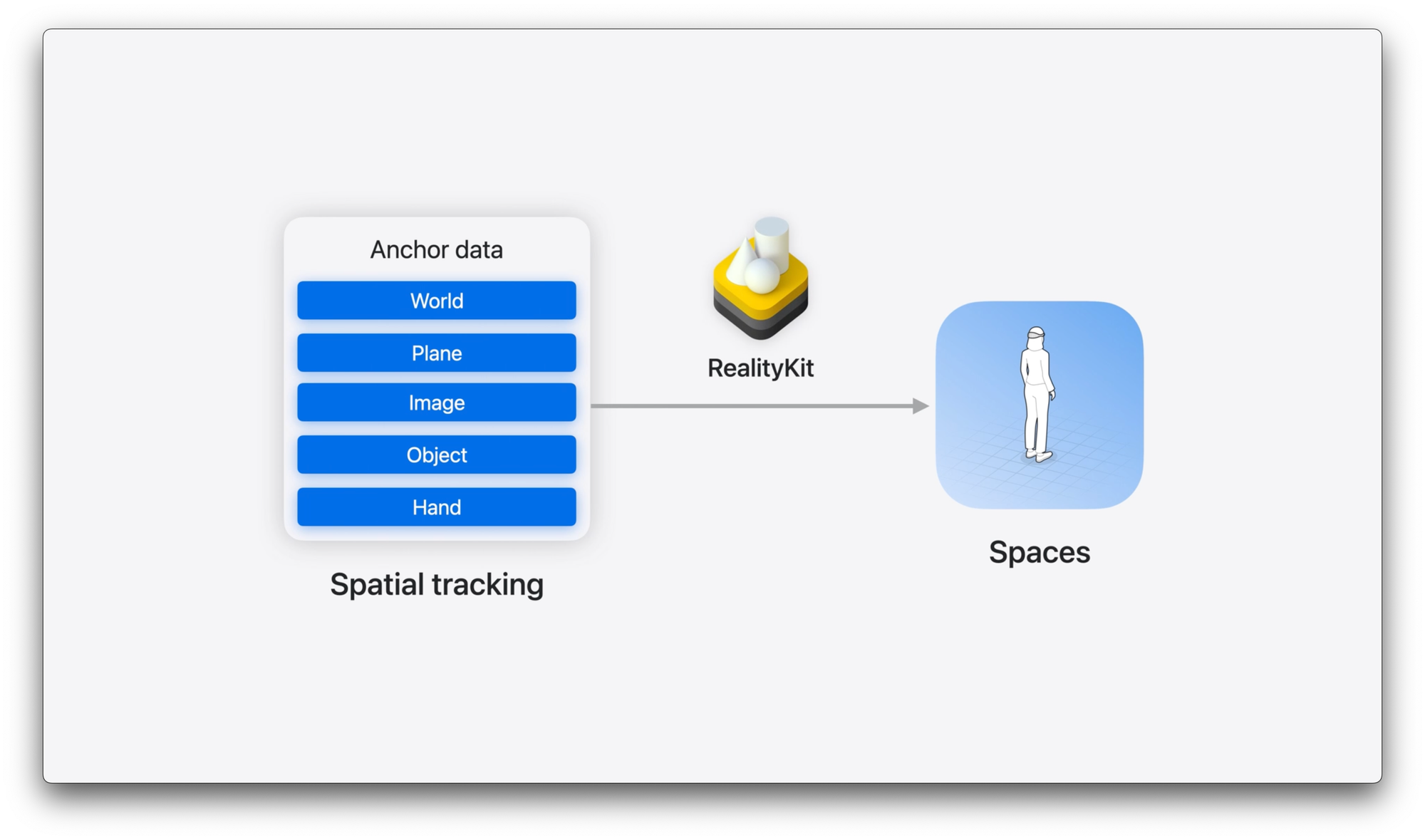

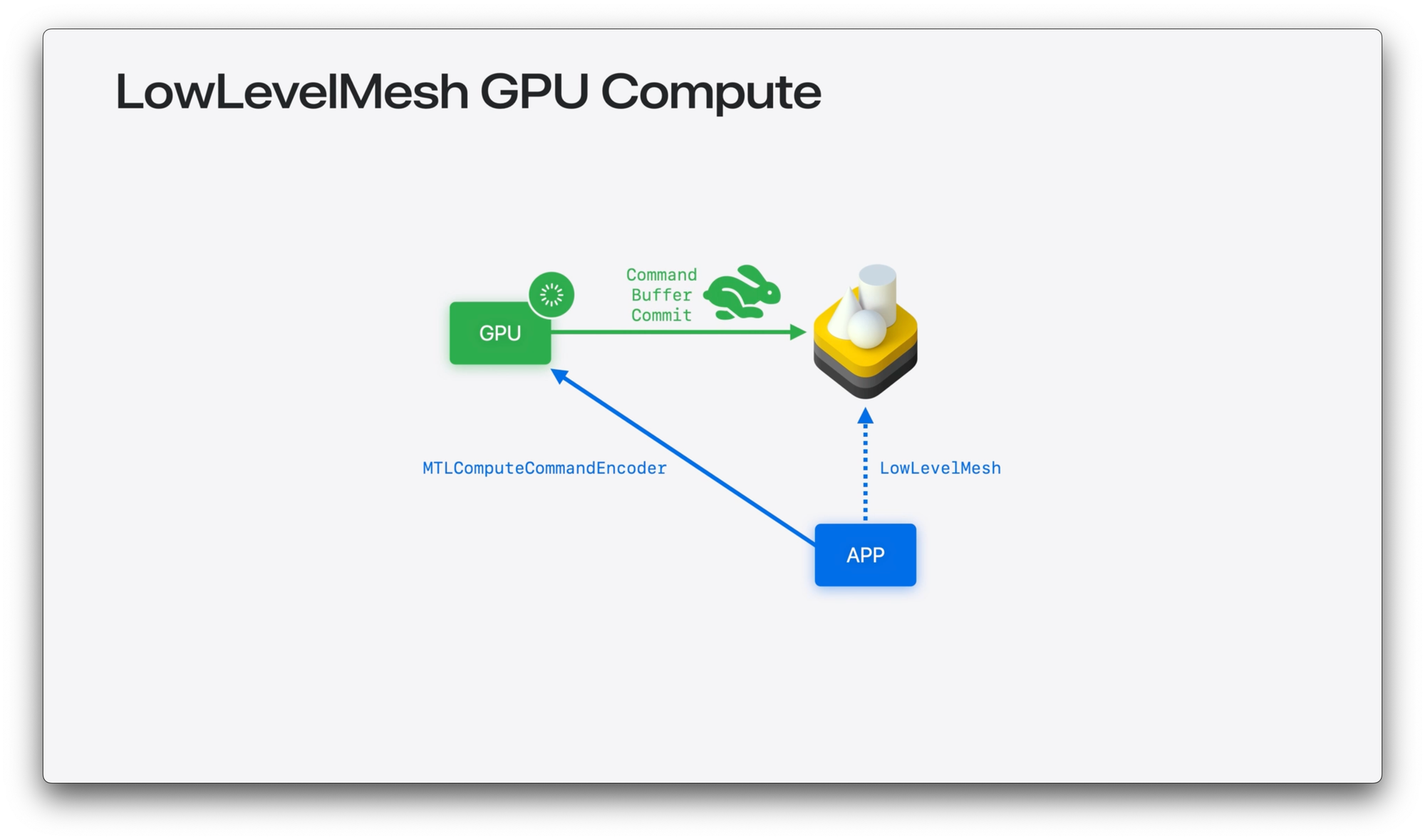

It is an initial public iteration that validates the closed-by-default policy by exposing only what is explicitly (and loudly) requested. It's also interesting how various APIs changed level. Spatial tracking is now easier to access (it is possible directly from RealityKit), and SwiftUI paths can be extruded into 3D meshes. At the other end, LowLevelMesh pierces down to a lower level. All of these are unexpected and very welcome changes.

Some APIs moved to a higher level and some to a lower one. Screenshots of the slides introducing spatial tracking for RealityKit and a basic LowLevelMesh example with deferred GPU compute

Missing bits

Accessibility

Accessibility is dispersed throughout many of the sessions but not addressed directly (at least not within the spatial computing track, although there is a general session). Let's also keep in mind that new features were announced for Global Accessibility Awareness Day just a few weeks ago.

Apple Intelligence

However, there is mention of Create ML, custom computer vision (CV), and access to the Neural Engine through the enterprise APIs.

NeRF

But the way Object Capture scans areas looks a lot like Luma Labs Capture, which makes me think that NeRF support will be coming soon.

Science

Last year we got some fascinating insights from cognitive scientists, which were notably absent this year.

Other details

- Improvements to guest users, AirPlay, and the calibration process

- Ergonomics all around

- Allowing compatible (iPad and iPhone) apps to live outside their folder suggests they see heavy use. It also signals a more permissive mindset about requiring native platform adoption.

- Node programming appears to be here to stay

- 3D Animation is coming together

- Reality Composer (not Pro) appears to have been absorbed into "Actions", together with Shortcuts and Preview features

- We need to learn Blender

- Custom hover effects (⭐)

- When world tracking is restricted (e.g., in low-light conditions), a new orientation-based tracking mode is available at the system level

- The hand prediction API will improve many experiences

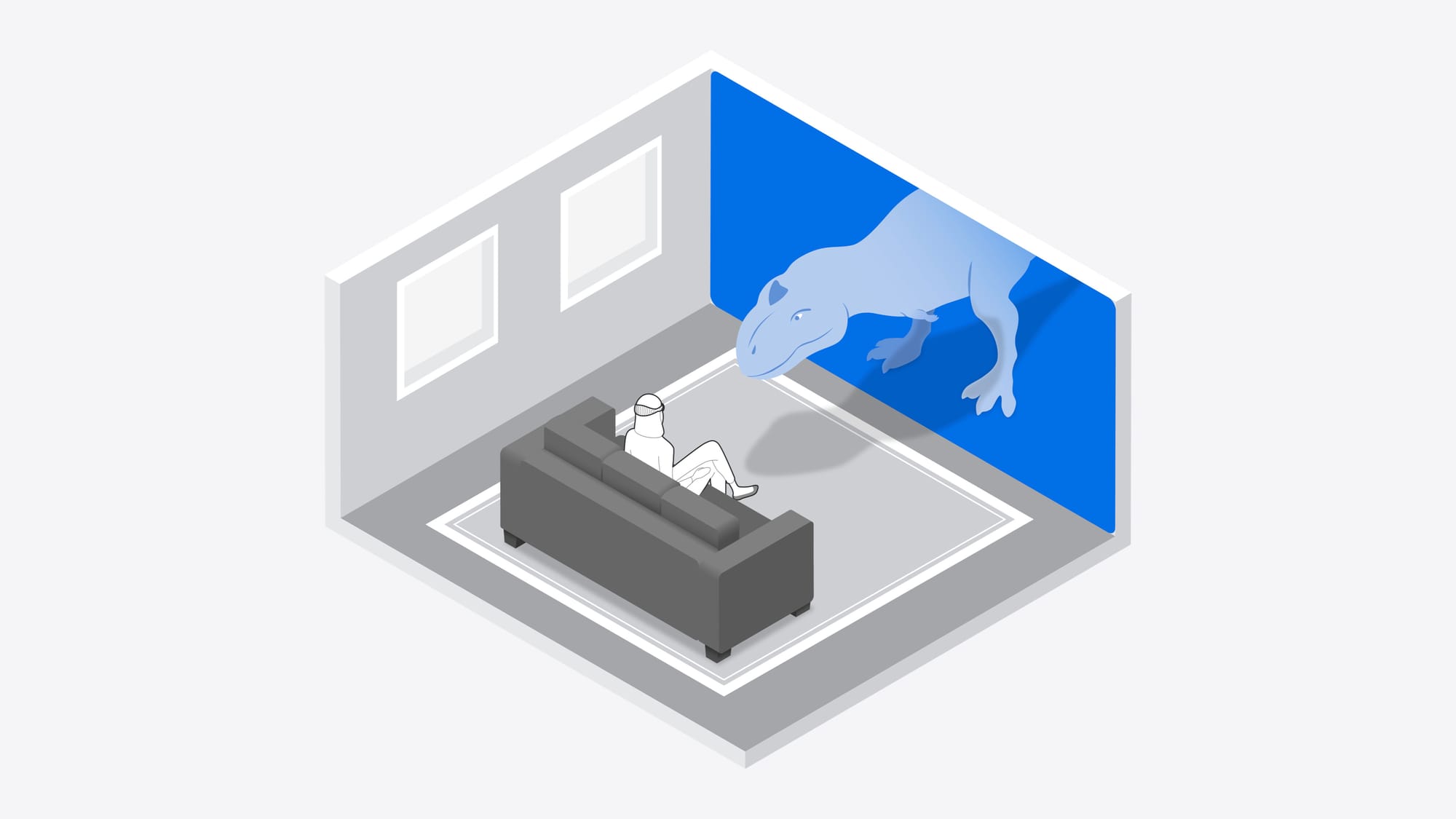

- We now know that the dinosaurs are named Izzy and Roger

- Ornaments for volumes

Conclusion

A shorter, more practical edition that delivered a more open and consistent set of tools, with a clear direction for expansion. Developer feedback has been heard and incorporated. I believe the overall stance toward the ecosystem will spark a new wave of powerful, stunning apps while keeping the platform's core values intact.

Bonus

Actionable Steps

- Explore and leverage Enterprise APIs

  - Integrate advanced features: The new enterprise APIs are a great opportunity. Keep in mind that these applications will not be available to end users. Even so, capabilities such as custom computer vision and access to the Neural Engine offer several potential ways to improve your business through more advanced data processing and analytics.

  - Security and compliance: These requirements define the appropriate type of device for a spatial computing product, and Apple Vision Pro leads here.

- Improve development and pipelines

  - Streamline development: Use the new tooling and pipelines shared at WWDC24 to accelerate your product development cycle, from concept to delivery. Focus on improved technologies such as RealityKit for spatial tracking and SwiftUI for more frictionless UI integration and product creation overall.

  - Adopt open standards: Use standards such as OpenUSD and MaterialX to ensure interoperability and collaboration across multiple platforms and technologies.

- Prioritize Accessibility

  - Embed accessibility from the start: Given the lack of direct emphasis in the sessions, it's important to treat accessibility as a high priority from the beginning and to include features that serve a variety of user needs. Adopt the upgrades Apple recently announced for Global Accessibility Awareness Day.

  - Consult accessibility experts: Work with professionals to ensure that your applications meet accessibility requirements and deliver a smooth experience for all users.

- Leverage HealthKit for visionOS

  - Develop health-focused apps: With HealthKit, visionOS opens up new potential to create applications that boost user wellbeing while harnessing spatial computing's unique features.

  - Data privacy compliance: Make sure health-focused applications adhere to privacy standards. Managing sensitive data such as eye tracking diligently and securely opens up new opportunities in highly sensitive industries.

- Rethink entertainment and improve productivity

  - Entertainment apps: Take advantage of visionOS 2.0's emphasis on entertainment and games to create rich, engaging customer experiences.

  - Productivity solutions: Use the most recent APIs and technologies to develop powerful productivity apps that improve workflow and user efficiency, including the ARKit advances in real-time object tracking and plane detection.